Crop Disease Detection Using YOLOv8

In this project, we use AI for a worthwhile goal: crop disease detection. If you're a tech enthusiast, or simply curious about how deep learning can transform agriculture, you're in the right place. We will guide you through designing a YOLOv8-based crop disease detection system that processes images and videos to detect crop diseases in real time.

Sounds interesting, right? Let's get into it!

Project Overview

Here, you'll learn how to build a crop disease identification model based on YOLOv8, one of today's leading object detection frameworks. We will show you how to set it up on Google Colab to take advantage of cloud computing. We then connect to Roboflow to manage the dataset and present a clean, interactive interface with Gradio. The result is a system that analyzes crop images or videos and identifies the diseases they show. Imagine a farmer taking a picture of a crop and getting a diagnosis within minutes: that is the future this kind of tool points toward.

Key Feature

By the end, you will have a working system that takes images or videos as input and outputs the diseases they depict. Farmers, agricultural professionals, and researchers can use it for real-time crop health monitoring.

Prerequisites

Before we jump into the code, here's what you'll need:

- Google Colab account: a free Google account gives you access to Colab, including free (usage-limited) GPU runtimes.

- Roboflow API key: required to download the dataset from Roboflow.

- A good understanding of Python and a passion for machine learning.

- Familiarity with YOLOv8 (Ultralytics), Roboflow, Gradio, NumPy, Matplotlib, OpenCV, and PIL.

Approach

We'll follow a structured approach to build this project:

- Data Collection: We'll use a crop image dataset from Roboflow that contains images of both diseased and healthy crops.

- Model Training: We will fine-tune the YOLOv8 model on this dataset so that it detects crop diseases accurately.

- Interactive Interface: Finally, we connect the trained model to Gradio. This gives users a simple front end where they can upload their own images or videos and see results immediately.

Workflow and Methodology

The overall workflow of this project includes:

- Data collection: We take a well-structured dataset from Roboflow. To improve model performance, we applied augmentation in Roboflow when downloading the dataset, which increases the number of training images.

- Data preprocessing: The data is then imported and resized to a consistent input size for the model.

- Train YOLOv8: The model is then trained for 100 epochs on the dataset, learning to recognize diseases like a professional!

- Test & Evaluate: After training, we run the model on data it has not seen before to check its performance. We then use the results to tune it for better accuracy.

- Build the Interface: Finally, we deploy a simple Gradio interface where the user can upload an image or video and receive disease detection results.
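The Test & Evaluate step is only meaningful if the evaluation images were kept out of training. Roboflow exports typically arrive already split into train, validation, and test folders, so the sketch below is illustrative rather than part of this project's pipeline. It defines a hypothetical `split_dataset` helper that deterministically shuffles a list of image names and divides it into three disjoint subsets. The 70/20/10 fractions and the seed are assumptions chosen for the example.

```python
import random


def split_dataset(items, train_frac=0.7, valid_frac=0.2, seed=42):
    """Shuffle items reproducibly and split them into train/valid/test lists.

    Hypothetical helper: the default fractions and seed are illustrative,
    not the split used by the Roboflow dataset in this project.
    """
    if not (0 < train_frac < 1 and 0 <= valid_frac < 1 and train_frac + valid_frac <= 1):
        raise ValueError("fractions must lie in [0, 1) and sum to at most 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG, so the split is reproducible
    n_train = int(len(shuffled) * train_frac)
    n_valid = int(len(shuffled) * valid_frac)
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:]  # the remainder is never seen during training
    return train, valid, test


images = [f"img_{i:03d}.jpg" for i in range(100)]
train, valid, test = split_dataset(images)
print(len(train), len(valid), len(test))  # 70 20 10
```

Fixing the seed means every run evaluates on the same held-out images, so accuracy numbers from different training runs stay comparable.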

The methodology involves:

- Data Preprocessing: The collected images are resized to the model's required input dimensions. Augmentation is then applied to increase the diversity of the training data and reduce overfitting.

- Model Architecture: YOLOv8 is used for real-time object detection. It is trained to locate and classify diseases in crop images.

- Evaluation: The model is tested on unseen crop disease images, and its performance is measured with mean Average Precision (mAP).
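mAP builds on Intersection over Union (IoU). A predicted box counts as a correct detection only if its IoU with a ground-truth box of the same class reaches a threshold such as 0.5. The pure-Python sketch below shows IoU and the greedy matching that turns detections into precision and recall for one class at one threshold. It is a simplified illustration, not Ultralytics' implementation: full mAP also integrates precision over the whole recall range, averages over classes, and (for mAP50-95) averages over IoU thresholds from 0.5 to 0.95. The function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2) corners."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Match predictions of one class to ground-truth boxes, highest confidence first.

    predictions: list of (confidence, box); ground_truth: list of boxes.
    A prediction is a true positive if it overlaps a not-yet-matched ground-truth
    box with IoU >= iou_threshold; each ground-truth box can be matched only once.
    """
    matched = set()
    tp = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_idx, best_iou = None, iou_threshold
        for idx, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if idx not in matched and overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            matched.add(best_idx)
            tp += 1
    fp = len(predictions) - tp  # unmatched predictions are false positives
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / len(ground_truth) if ground_truth else 0.0
    return precision, recall


gt = [(10, 10, 50, 50), (60, 60, 100, 100)]
preds = [(0.9, (12, 12, 50, 50)), (0.8, (200, 200, 240, 240))]
print(precision_recall(preds, gt))  # (0.5, 0.5): one hit, one false alarm, one missed box
```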

Data Collection

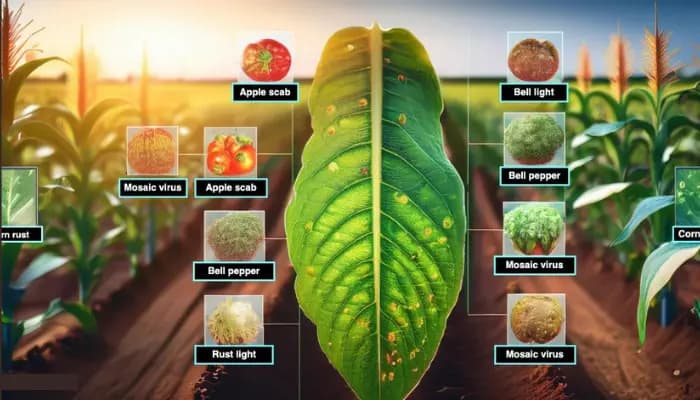

The first and most important part of this type of project is collecting the dataset. We use Roboflow, a platform for gathering, annotating, and exporting high-quality datasets for object detection tasks like this one. Our dataset includes field images of various crops in both healthy and diseased conditions. Such a diverse dataset allows the model to distinguish healthy plants from diseased ones.

Data Preparation

- Cleaning the Data: Low-quality and off-topic images can confuse the model, so they are filtered out. This prevents the model from learning from useless data.

- Resizing Images: All images are resized to 640x640, the default YOLOv8 input size. A uniform size lets images be batched together during training and matches the resolution the model expects.

- Data Augmentation: To reduce overfitting, we augment the data by rotating and flipping images and changing their brightness. The resulting dataset is more diverse, which helps the model generalize better to new data.
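In this project, the resizing and augmentation steps above were applied in Roboflow, but they are easy to reproduce locally. The NumPy sketch below makes two assumptions: labels use YOLO's normalized `(class, x_center, y_center, width, height)` layout, and resizing is a plain stretch, under which normalized box coordinates stay unchanged. The key detail for object detection is that geometric augmentations (flips, rotations) must transform the boxes along with the pixels, while brightness changes leave them alone. All helper names are hypothetical.

```python
import numpy as np

# Hypothetical helpers. `boxes` is an (N, 5) float array of YOLO labels:
# class, x_center, y_center, width, height, with coordinates normalized to [0, 1].


def resize_nearest(image, size=640):
    """Stretch an H x W (x C) image to size x size using nearest-neighbour sampling.

    A plain stretch keeps normalized YOLO box coordinates unchanged.
    """
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return image[rows][:, cols]


def hflip(image, boxes):
    """Mirror the image left-right; only the box x-centre changes."""
    out = boxes.copy()
    out[:, 1] = 1.0 - boxes[:, 1]
    return image[:, ::-1], out


def rot90(image, boxes):
    """Rotate 90 degrees counter-clockwise and remap the boxes to match.

    A point at normalized (x, y) moves to (y, 1 - x), and width/height swap.
    """
    out = boxes.copy()
    out[:, 1] = boxes[:, 2]
    out[:, 2] = 1.0 - boxes[:, 1]
    out[:, 3] = boxes[:, 4]
    out[:, 4] = boxes[:, 3]
    return np.rot90(image), out


def adjust_brightness(image, factor):
    """Scale uint8 pixel intensities by `factor`, clipping to [0, 255]; boxes are unaffected."""
    return np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)
```

Because a 90 degree rotation swaps the image's height and width, a rotated image would be resized to 640x640 again before training.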

Data Preparation Workflow

- Downloading the dataset from Roboflow: The dataset comes from Roboflow, a platform that hosts and manages datasets for machine learning projects.

- Initializing a connection to Roboflow with your API key: The connection is authenticated with a unique API key, which grants access to the dataset repository and keeps data retrieval secure.

- Accessing a specific project in the workspace: The project, named "crops-diseases-detection-and-classification", contains a labeled dataset created for crop disease detection.

- Downloading the YOLOv8-formatted dataset: Version 12 of this dataset is downloaded, pre-configured for use with the YOLOv8 architecture.
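In a YOLOv8-formatted export, each image has a matching `.txt` label file with one object per line. Each line holds a class index followed by the box center, width, and height, all normalized to the range 0 to 1. The sketch below converts such lines into pixel corner coordinates, which is handy for drawing boxes or spot-checking annotations. It assumes bounding-box labels only: segmentation exports add polygon points and would be rejected. The helper names are hypothetical.

```python
def yolo_to_pixel_box(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixel coordinates."""
    parts = line.split()
    if len(parts) != 5:
        # Bounding-box labels have exactly 5 fields; polygon (segmentation) labels have more.
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:])
    return (
        class_id,
        (xc - w / 2) * img_w,  # left
        (yc - h / 2) * img_h,  # top
        (xc + w / 2) * img_w,  # right
        (yc + h / 2) * img_h,  # bottom
    )


def parse_label_text(text, img_w, img_h):
    """Parse the full contents of a label file, skipping blank lines."""
    return [yolo_to_pixel_box(line, img_w, img_h) for line in text.splitlines() if line.strip()]


example = "3 0.5 0.5 0.25 0.5\n\n0 0.1 0.2 0.1 0.2\n"
print(parse_label_text(example, 640, 640))
```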

Code Explanation

STEP 1:

Connecting Google Drive

This code mounts your Google Drive in a Google Colab notebook, so files saved in Drive can be read directly from Colab. From there you can modify and analyze data, train models, and save results back to Drive.

```python
from google.colab import drive

drive.mount('/content/drive')
```

Install the necessary packages

Install the roboflow, ultralytics, and gradio Python packages. roboflow is used to manage and download datasets, ultralytics provides the YOLOv8 models, and gradio builds the web interface.

```
!pip install roboflow ultralytics gradio
```

Downloading the Dataset from Roboflow for Crop Disease Detection

The Roboflow API is initialized with your API key. Version 12 of the "crops-diseases-detection-and-classification" dataset is then downloaded in YOLOv8 format.

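```python
from roboflow import Roboflow

# Replace the placeholder with your own Roboflow API key;
# avoid publishing real keys in shared notebooks or articles.
rf = Roboflow(api_key="YOUR_ROBOFLOW_API_KEY")

# Select the workspace and project that host the crop disease dataset
project = rf.workspace("graduation-project-2023").project("crops-diseases-detection-and-classification")

# Download version 12 of the dataset in YOLOv8 format
version = project.version(12)
dataset = version.download("yolov8")
```

STEP 2: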

Import the necessary packages

We import the libraries used throughout the project: ultralytics for the YOLOv8 model, OpenCV and PIL for image processing, NumPy for array operations, matplotlib for visualization, and gradio for the interface.

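```python
import ultralytics
ultralytics.checks()  # print Ultralytics, Python, torch and hardware info to verify the setup

import cv2  # OpenCV; use cv2.imread when you want OpenCV's reader (BGR arrays)
import numpy as np
import gradio as gr
from PIL import Image
from matplotlib import pyplot as plt
from matplotlib.image import imread  # the bare name `imread` refers to matplotlib's reader
from ultralytics import YOLO
```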
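With the dataset downloaded and the libraries imported, a quick sanity check of the export can save a failed training run. The sketch below assumes the usual YOLOv8 export layout, with `<split>/images` and `<split>/labels` folders under the dataset directory. The Roboflow `dataset` object exposes that directory as `dataset.location`. The sketch counts images and label files per split and lists images that have no label file. Ultralytics treats such images as background, so a long list may point to missing annotations. The function name and the set of image extensions are assumptions.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of image extensions


def summarize_split_dirs(root):
    """Count images and label files per split of a YOLOv8-format export (hypothetical helper).

    Assumes the layout <root>/<split>/images and <root>/<split>/labels.
    """
    root = Path(root)
    summary = {}
    for split in ("train", "valid", "test"):
        img_dir, lbl_dir = root / split / "images", root / split / "labels"
        if not img_dir.is_dir():
            continue  # some exports omit a split
        images = [p for p in img_dir.iterdir() if p.suffix.lower() in IMAGE_EXTS]
        labels = {p.stem for p in lbl_dir.glob("*.txt")} if lbl_dir.is_dir() else set()
        missing = sorted(p.name for p in images if p.stem not in labels)
        summary[split] = {"images": len(images), "labels": len(labels), "missing_labels": missing}
    return summary


# In Colab, after the download step: print(summarize_split_dirs(dataset.location))
```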