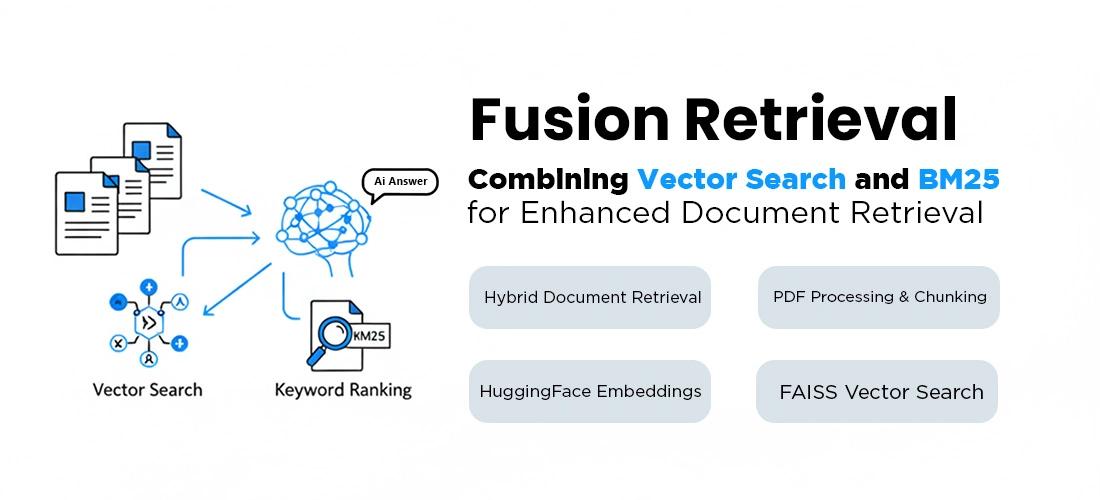

Fusion Retrieval: Combining Vector Search and BM25 for Enhanced Document Retrieval

This project enhances document retrieval by combining semantic search (FAISS) and keyword-based ranking (BM25). It enables efficient search across PDF documents, using vector embeddings and language model-driven content generation for improved accuracy.

Project Overview

The system extracts text from PDFs with PyMuPDF and splits it into manageable chunks with LangChain’s RecursiveCharacterTextSplitter. The chunks are embedded with Hugging Face’s MiniLM model and stored in FAISS for fast similarity search, while BM25 scoring ranks documents by keyword relevance. To refine the search further, DeepSeek-R1-Distill-Qwen-1.5B generates a hypothetical document from the query, which gives retrieval additional context. Retrieved documents are displayed with citations, making the system suited to academic research, legal analysis, enterprise knowledge retrieval, and AI-driven Q&A solutions.

Prerequisites

- Google Colab or a local Python environment to run the code.

- Python 3.8+ installed.

- Libraries & Dependencies:

  - langchain, langchain-community, langchain-openai, langchain-cohere

  - sentence-transformers, faiss-cpu, PyMuPDF, rank-bm25

  - openai, transformers, torch, accelerate, pypdf

- Google Drive access (if running on Colab) for storing and retrieving PDFs.

- A pre-trained language model (e.g., DeepSeek-R1-Distill-Qwen-1.5B) for LLM-based generation.

- Basic understanding of FAISS, BM25 and text embeddings for tuning retrieval.

Approach

The system starts by processing PDF documents with PyMuPDF (fitz) to extract the text. This text is then divided into manageable chunks using LangChain’s RecursiveCharacterTextSplitter. To maintain clean formatting, tab characters (\t) are replaced with spaces. The text chunks are transformed into vector embeddings using Hugging Face’s MiniLM model and stored in FAISS, which enables quick semantic similarity searches. BM25 ranking is also applied for keyword-based retrieval, so that documents are ranked according to both contextual meaning and exact keyword matches. A hybrid retrieval approach merges FAISS-based semantic search with BM25 scoring for better accuracy. To further enhance the results, DeepSeek-R1-Distill-Qwen-1.5B generates a hypothetical document from the query, which gives the search additional context. Finally, the retrieved documents are presented with citations, ensuring transparency and traceability for research, enterprise search and AI-driven Q&A applications.

Workflow and Methodology

Workflow

Step 1: Data Ingestion

- Manually upload PDF files or load them from Google Drive in Colab.

Step 2: Text Extraction & Cleaning

- Utilize PyMuPDF (fitz) to extract text from PDFs.

- Replace tab characters (\t) with spaces for better formatting.
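
The extraction and cleaning step can be sketched roughly as follows. This is a minimal illustration, not the project's exact code: the helper names `clean_text` and `extract_pages` are hypothetical, and PyMuPDF is imported inside the function so the cleaning helper can be tried without it installed.

```python
from typing import List, Tuple


def clean_text(text: str) -> str:
    """Replace each tab character with a single space."""
    return text.replace("\t", " ")


def extract_pages(pdf_path: str) -> List[Tuple[int, str]]:
    """Return (1-based page number, cleaned text) pairs for one PDF.

    Hypothetical helper: assumes PyMuPDF is installed and pdf_path is readable.
    """
    import fitz  # PyMuPDF, imported lazily so clean_text works on its own

    pages = []
    with fitz.open(pdf_path) as doc:
        for index, page in enumerate(doc):
            pages.append((index + 1, clean_text(page.get_text())))
    return pages


# Cleaning can be checked without any PDF at hand.
print(clean_text("Column A\tColumn B"))
```

Keeping the page number next to the text at this stage makes it easier to attach citations later.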

Step 3: Text Splitting & Chunking

- Apply LangChain’s RecursiveCharacterTextSplitter to divide text into manageable chunks while maintaining context.
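
To show why overlapping chunks help preserve context, here is a simplified stand-in for the splitter. It is not RecursiveCharacterTextSplitter itself, which also prefers to break on separators such as paragraphs and spaces. The function name `chunk_text` and the default sizes are assumptions chosen for illustration.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> List[str]:
    """Split text into fixed-size character windows that overlap.

    Simplified sketch: consecutive chunks share chunk_overlap characters,
    so a sentence cut at a boundary still appears whole in one of them.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
```

A larger overlap reduces the chance of splitting a relevant passage but increases the number of chunks to embed and store.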

Step 4: Embedding Generation & Vector Storage

- Convert text chunks into vector embeddings using Hugging Face’s MiniLM model.

- Store these embeddings in FAISS, enabling fast semantic similarity searches.
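
Conceptually, semantic search compares a query vector against the stored chunk vectors and returns the closest ones. The sketch below does this by brute force with cosine similarity on toy vectors. It is only an illustration of the idea: FAISS does the same job far more efficiently, and the metric it uses depends on how the index is configured. The names `cosine_similarity` and `top_k` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float],
          chunk_vecs: List[Sequence[float]],
          k: int = 2) -> List[Tuple[int, float]]:
    """Return (chunk index, similarity) for the k most similar chunks."""
    scored = [(i, cosine_similarity(query_vec, vec)) for i, vec in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-dimensional "embeddings"; real MiniLM vectors have hundreds of dimensions.
print(top_k([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], k=2))
```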

Step 5: Keyword-Based Retrieval with BM25

- Compute BM25 scores to rank documents based on keyword similarity.
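
For readers who want to see what BM25 computes, here is a small pure-Python sketch of Okapi BM25 scoring. It is illustrative only: the project uses the rank-bm25 library, whose IDF handling differs slightly from the smoothed variant below. Whitespace tokenization, the function name `bm25_scores`, and the defaults `k1=1.5` and `b=0.75` are assumptions.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: str, documents: List[str],
                k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score every document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    if n_docs == 0:
        return []
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Number of documents that contain each term at least once.
    doc_freq: Counter = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    query_terms = query.lower().split()
    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query_terms:
            freq = term_freq.get(term, 0)
            if freq == 0:
                continue
            # Smoothed IDF (always non-negative): rare terms weigh more.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            # Term frequency saturates via k1; b normalizes for document length.
            denom = freq + k1 * (1.0 - b + b * len(tokens) / avg_len)
            score += idf * freq * (k1 + 1.0) / denom
        scores.append(score)
    return scores


docs = ["the cat sat on the mat", "dogs chase cats", "vector search with faiss"]
print(bm25_scores("faiss search", docs))
```

Only documents that share at least one term with the query receive a non-zero score, which is why BM25 complements embedding-based search for exact keyword matches.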

Step 6: Hybrid Retrieval (FAISS + BM25)

- Perform FAISS-based similarity search to retrieve semantically relevant documents.

- Refine search results using BM25 keyword ranking to ensure both semantic and keyword relevance.
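
One common way to combine the two signals is to normalize each score list to the range 0 to 1 and take a weighted sum. The sketch below assumes that approach; the weighting parameter `alpha` and the function names are hypothetical and not taken from the project's code. It also assumes both inputs are "higher is better" scores, so raw FAISS distances would need to be inverted first.

```python
from typing import List


def min_max_normalize(scores: List[float]) -> List[float]:
    """Rescale scores to [0, 1]; a constant list maps to all zeros."""
    if not scores:
        return []
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def fuse_scores(vector_scores: List[float],
                keyword_scores: List[float],
                alpha: float = 0.5) -> List[float]:
    """Weighted sum of normalized semantic and keyword scores.

    alpha = 1.0 relies only on the vector scores, alpha = 0.0 only on BM25.
    """
    if len(vector_scores) != len(keyword_scores):
        raise ValueError("both score lists must cover the same documents")
    semantic = min_max_normalize(vector_scores)
    keyword = min_max_normalize(keyword_scores)
    return [alpha * s + (1.0 - alpha) * k for s, k in zip(semantic, keyword)]


def rank_indices(scores: List[float]) -> List[int]:
    """Document indices ordered from best to worst fused score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


fused = fuse_scores([0.9, 0.2, 0.5], [0.0, 3.0, 1.5], alpha=0.7)
print(rank_indices(fused))
```

Normalizing first matters because BM25 scores and similarity scores live on different scales, and an unnormalized sum would let one of them dominate.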

Step 7: LLM-Powered Hypothetical Document Generation

- Use DeepSeek-R1-Distill-Qwen-1.5B to generate a hypothetical document based on the user’s query.

- Use this document to enhance retrieval by adding context-aware results.
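
The hypothetical-document step might look roughly like this. The sketch keeps the model behind a plain callable so the logic can be followed without loading any weights. The prompt wording and the function names are assumptions, as is the handling of a `</think>` marker, which reasoning-style models such as the R1 distillations typically emit before their final answer.

```python
from typing import Callable


def build_hyde_prompt(query: str) -> str:
    """Ask the model for a passage that could plausibly answer the query."""
    return (
        "Write a short passage that could plausibly answer the question below.\n"
        f"Question: {query}\n"
        "Passage:"
    )


def strip_reasoning(text: str) -> str:
    """Keep only the text after the last </think> marker, if one is present."""
    return text.split("</think>")[-1].strip()


def hypothetical_document(query: str, generate: Callable[[str], str]) -> str:
    """Generate a hypothetical document for the query.

    `generate` is any function mapping a prompt to generated text, for example
    a thin wrapper around a Hugging Face text-generation pipeline.
    """
    raw_output = generate(build_hyde_prompt(query))
    return strip_reasoning(raw_output)


# Stand-in generator so the flow can be tried without a model.
def fake_generate(prompt: str) -> str:
    return "<think>draft reasoning</think>\nBM25 ranks documents by keyword overlap."


print(hypothetical_document("What does BM25 do?", fake_generate))
```

The generated passage is then embedded and searched like an ordinary query, on the idea that an answer-shaped text tends to sit closer to relevant chunks than the question alone.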

Step 8: Retrieval with Citations

- Attach source citations to retrieved documents, including page numbers (if available).

- Display results in a structured format for easy interpretation and traceability.
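
A minimal sketch of citation formatting is shown below. The result layout (dictionaries with `source`, `page` and `text` keys) and the function names are assumptions made for illustration; the project's own display code may structure results differently.

```python
import textwrap
from typing import Dict, List, Optional


def format_citation(source: str, page: Optional[int]) -> str:
    """Build a citation tag, including the page number only when known."""
    if page is None:
        return f"[{source}]"
    return f"[{source}, p. {page}]"


def format_results(results: List[Dict], width: int = 80) -> str:
    """Render retrieved chunks as numbered, wrapped blocks with citations."""
    blocks = []
    for rank, item in enumerate(results, start=1):
        citation = format_citation(item["source"], item.get("page"))
        body = textwrap.fill(item["text"], width=width)
        blocks.append(f"Result {rank} {citation}\n{body}")
    return "\n\n".join(blocks)


print(format_results([
    {"source": "paper.pdf", "page": 3, "text": "BM25 ranks documents by keyword relevance."},
    {"source": "notes.pdf", "text": "FAISS enables fast similarity search."},
]))
```

If the page numbers come from a loader that counts pages from zero, add one before display so the citation matches the page a reader would open.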

Methodology

- PDF Processing and Text Cleaning: Extract text from PDFs and remove unnecessary characters (such as tabs) before splitting it into smaller chunks.

- Vector Embeddings: Encode the text chunks as dense vectors using the MiniLM embedding model.

- FAISS-Based Semantic Search: Store the embeddings in a FAISS index and retrieve chunks by vector similarity.

- BM25 Keyword Matching: Rank documents by keyword relevance using BM25.

- Hybrid Retrieval: Combine FAISS similarity search with BM25 scoring to improve accuracy.

- Hypothetical Answer Generation: Use DeepSeek-R1-Distill-Qwen-1.5B to produce context-rich hypothetical documents that enhance retrieval quality.

- Citations of Results: Attach source references to retrieved documents for traceability and validation.

Data Collection and Preparation

Data Collection

- Users manually upload PDF files through Google Colab using files.upload().

- Alternatively, files can be loaded from Google Drive by mounting the Drive in Colab.

Data Preparation Workflow

- Upload PDFs via Google Colab or Google Drive.

- Extract text using PyMuPDF (fitz).

- Clean text by replacing tab characters (\t) with spaces.

- Split text into chunks using LangChain’s RecursiveCharacterTextSplitter.

- Generate embeddings with Hugging Face’s MiniLM model.

- Store embeddings in FAISS for fast semantic search.

This process ensures clean, structured and retrievable data for the system.

Code Explanation

Step 1:

Installing Required Libraries

This code installs essential libraries for building an AI-powered retrieval system. It includes LangChain, FAISS, PyMuPDF, Sentence Transformers and Rank-BM25 for text embeddings, vector search and document processing. It also installs PyPDF for handling PDF files.

```python
!pip install langchain langchain-community langchain-openai langchain-cohere sentence-transformers faiss-cpu PyMuPDF rank-bm25 openai transformers torch accelerate
!pip install pypdf
```

Importing Libraries for Document Processing & AI Retrieval

This code imports essential libraries for PDF reading, text processing and AI-powered retrieval. It uses PyMuPDF (fitz) for PDFs, LangChain for text splitting and embeddings, FAISS for vector storage and BM25 for keyword-based search. It also includes HuggingFace Transformers for LLM-based text generation and retrieval.

```python
import os
import fitz  # PyMuPDF for PDF reading
import textwrap
import numpy as np
from typing import List

# The older `langchain.*` import paths for these classes are deprecated;
# they now live in langchain-community and langchain-text-splitters.
from langchain_community.document_loaders import PyPDFLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS
from rank_bm25 import BM25Okapi
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline
import torch
```

Step 2:

Mounting Google Drive

This code mounts Google Drive to Colab, allowing access to files stored in Drive. The mounted directory is /content/drive, enabling seamless file handling.

```python
from google.colab import drive

drive.mount('/content/drive')
```

Uploading PDF Files in Colab

This code allows manual PDF file uploads in Google Colab. It uses files.upload() to let users select files, then extracts their filenames into pdf_paths for further processing.

```python
from google.colab import files

uploaded = files.upload()  # Upload PDF files manually
pdf_paths = list(uploaded.keys())  # Get the uploaded file names
```

Step 3:

Loading a Pretrained LLM for Text Generation

This code loads the DeepSeek-R1-Distill-Qwen-1.5B model for text generation using Hugging Face. It initializes the tokenizer, loads the model with optimized settings (float16 precision and automatic device mapping) and creates an inference pipeline for generating text.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline
import torch

MODEL_NAME = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"  # You can change this

# Load tokenizer & model
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
model = AutoModelForCausalLM.from_pretrained(
    MODEL_NAME, device_map="auto", torch_dtype=torch.float16
)

# Create inference pipeline (streaming disabled)
llm_pipeline = pipeline("text-generation", model=model, tokenizer=tokenizer)
```

Processing PDFs and Creating a FAISS Vector Store

This code processes multiple PDFs into a FAISS vector store for efficient similarity search. It loads PDFs using PyPDFLoader, splits the text into chunks with overlap for better retrieval and cleans the text by replacing tab characters (\t) with spaces. The cleaned text is then converted into embeddings using HuggingFace's MiniLM model and stored in FAISS for fast retrieval. Finally, it confirms the successful creation of the vector store.