Optimizing Chunk Sizes for Efficient and Accurate Document Retrieval Using HyDE Evaluation

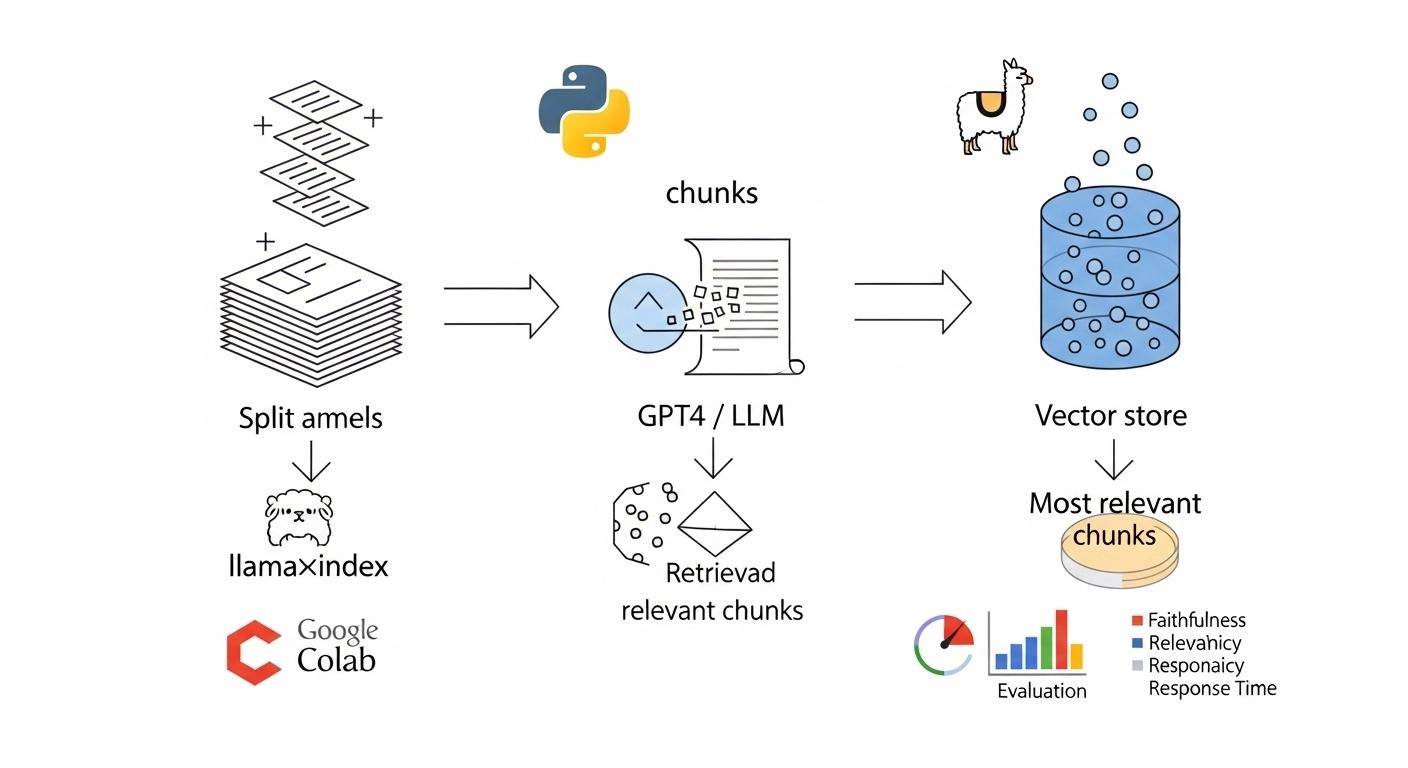

This project combines generative AI with efficient document retrieval, using GPT-4 and vector indexing. It relies on the llama-index library, including its SimpleDirectoryReader class, to load and index large document sets so that the system stays both scalable and accurate in processing information.

Project Overview

This project measures how chunk size affects retrieval effectiveness in a query engine powered by GPT-4. The system reads documents from a directory with llama-index's SimpleDirectoryReader and uses a dataset generator to produce evaluation questions. GPT-4 then answers those questions, and tailored prompt templates evaluate each answer for faithfulness and relevancy. The main components are vector indexing, asynchronous processing with nest_asyncio, and three performance measures: response time, faithfulness, and relevancy. Together they form a sturdy framework for evaluating generative AI applications on document retrieval tasks.

Prerequisites

- Python 3.9+ is required for running the project, since current llama-index releases do not support older interpreters.

- Required packages: llama-index, langchain-community, langchain-openai

- OpenAI API key configured

- Accessible document directory

- nest_asyncio installed

Approach

The approach reads documents from a specified directory and indexes them as vectors with the llama-index library. A dataset generator produces evaluation questions, GPT-4 answers them, and custom prompt templates assess each response for faithfulness and relevancy. The evaluation repeats for several chunk sizes, recording the average response time, faithfulness, and relevancy for each, and the results are aggregated into a DataFrame for further analysis.

Workflow and Methodology

Workflow

- Load documents from the designated directory using a directory reader.

- Generate evaluation questions from a subset of these documents.

- Set up GPT-4 as the language model and configure vector indexing settings, including chunk sizes.

- Create a vector store index from the loaded documents.

- Process evaluation questions through a GPT-4 powered query engine.

- Measure the response time and evaluate each answer for faithfulness and relevancy.

- Aggregate the performance metrics for different chunk sizes into a results dictionary.

- Convert the results into a DataFrame for analysis and visualization.
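The workflow above can be sketched as a single loop over chunk sizes. This is a minimal, library-free sketch under stated assumptions, not the project's actual code: `build_engine`, `judge_faithful`, and `judge_relevant` are hypothetical callables standing in for the llama-index query engine and the GPT-4 evaluators, and the stubs at the bottom exist only so the example runs without an API key.

```python
import time
from statistics import mean
from typing import Callable, Dict, List


def evaluate_chunk_sizes(
    chunk_sizes: List[int],
    questions: List[str],
    build_engine: Callable[[int], Callable[[str], str]],
    judge_faithful: Callable[[str, str], bool],
    judge_relevant: Callable[[str, str], bool],
) -> Dict[str, list]:
    """Return column-oriented results with one entry per chunk size."""
    results: Dict[str, list] = {
        "chunk_size": [],
        "avg_response_time": [],
        "avg_faithfulness": [],
        "avg_relevancy": [],
    }
    for size in chunk_sizes:
        # Hypothetical factory: builds an index and query function for this size.
        query = build_engine(size)
        times, faithful, relevant = [], [], []
        for question in questions:
            start = time.perf_counter()
            answer = query(question)
            times.append(time.perf_counter() - start)
            # Each judgement is recorded as 1.0 (pass) or 0.0 (fail).
            faithful.append(1.0 if judge_faithful(question, answer) else 0.0)
            relevant.append(1.0 if judge_relevant(question, answer) else 0.0)
        results["chunk_size"].append(size)
        results["avg_response_time"].append(mean(times))
        results["avg_faithfulness"].append(mean(faithful))
        results["avg_relevancy"].append(mean(relevant))
    return results


# Stub components (assumptions for illustration only).
def stub_engine(size: int) -> Callable[[str], str]:
    return lambda question: f"[chunk_size={size}] answer to: {question}"


def always_true(question: str, answer: str) -> bool:
    return True


def mentions_question(question: str, answer: str) -> bool:
    return question in answer


demo_results = evaluate_chunk_sizes(
    [128, 256, 512],
    ["What is chunking?", "Why overlap?"],
    stub_engine,
    always_true,
    mentions_question,
)
```

A dictionary of equal-length lists like `demo_results` can be passed directly to `pandas.DataFrame(...)` for the final analysis step.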

Methodology

- Document Chunking: Documents are segmented into chunks with varying sizes to explore the balance between retrieval efficiency and information retention.

- Question Generation: A dataset generator creates evaluation questions from these document chunks to simulate realistic query scenarios.

- Vector Indexing: The segmented documents are organized using vector indexing, facilitating efficient similarity search during retrieval.

- GPT-4 Query Processing: GPT-4 serves as the language model behind the query engine and generates the response to each question.

- Custom Prompt Templates: Tailored prompt templates assess the generated responses for both faithfulness (whether the answer is supported by the retrieved context) and relevancy to the queries.

- Performance Metrics: Quantitative metrics such as average response time, faithfulness and relevancy scores are computed to evaluate performance.

- Comparative Analysis: Results across different chunk sizes are compared and analyzed to identify optimal settings for efficient and accurate document retrieval.
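To make the similarity-search step behind vector indexing concrete, here is a toy sketch of top-k retrieval by cosine similarity. It is not how llama-index implements its vector store; the three-dimensional vectors, the chunk labels, and the helper names `cosine_similarity` and `top_k` are made up for illustration, whereas real embeddings have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: List[float],
    chunk_vecs: Dict[str, List[float]],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k chunk ids most similar to the query, best first."""
    scored = [
        (chunk_id, cosine_similarity(query_vec, vec))
        for chunk_id, vec in chunk_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up embeddings for three chunks.
toy_embeddings = {
    "chunk_a": [1.0, 0.0, 0.0],
    "chunk_b": [0.7, 0.7, 0.0],
    "chunk_c": [0.0, 0.0, 1.0],
}
nearest = top_k([1.0, 0.1, 0.0], toy_embeddings, k=2)
```

Here `nearest` ranks `chunk_a` ahead of `chunk_b`, and `chunk_c`, which is orthogonal to the query, is left out.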

Data Collection and Preparation

Data Collection

The project sources its documents from a designated Google Drive folder that holds files for generative AI projects, in particular those on optimizing document retrieval with HyDE evaluation. SimpleDirectoryReader ingests the folder automatically, loading all relevant documents for further processing and analysis.

Data Preparation Workflow

- Load raw documents from the Google Drive folder using SimpleDirectoryReader.

- Automatically ingest all relevant files for processing.

- Segment the documents into chunks of the specified size and overlap when the vector index is built.

- Generate evaluation questions from a subset of the documents via a dataset generator.

- Randomly sample a defined number of evaluation questions for further analysis.
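The chunking step in this workflow can be illustrated with a small sliding-window splitter. This is a simplified sketch, assuming whitespace-separated words as tokens; llama-index's own splitter works on model tokens and respects sentence boundaries, and the function name `chunk_tokens` is hypothetical.

```python
from typing import List


def chunk_tokens(tokens: List[str], chunk_size: int, overlap: int) -> List[List[str]]:
    """Split tokens into windows of chunk_size that share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once a window reaches the end, to avoid a redundant tail chunk.
        if start + chunk_size >= len(tokens):
            break
    return chunks


words = "a b c d e f g h i j".split()
demo_chunks = chunk_tokens(words, chunk_size=4, overlap=1)
```

With ten words, a chunk size of 4, and an overlap of 1, each chunk starts with the last word of the previous one. A smaller chunk size yields more, narrower chunks, which is the trade-off the evaluation measures.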

Code Explanation

Mounting Google Drive

This code mounts Google Drive to Colab, allowing access to files stored in Drive. The mounted directory is /content/drive, enabling seamless file handling.

from google.colab import drive

drive.mount('/content/drive')

Installation Commands Overview

These commands install the necessary Python packages: the first installs "llama-index" for building and querying indexes, the second installs or upgrades "langchain-community" for community-maintained LangChain integrations, and the third installs "langchain-openai" to integrate OpenAI's language models with the project.

!pip install llama-index

!pip install -U langchain-community

!pip install langchain-openai

Code Setup and API Key Configuration

This code imports the necessary libraries, applies nest_asyncio so that asynchronous calls can run inside the notebook's already-running event loop, and loads the llama_index modules used for indexing and evaluation. It then looks for an OpenAI API key, first in Google Colab's secrets and then in the environment, exports it as an environment variable if found, and raises an error otherwise. Finally, it appends the parent directory to the system path so that modules located there can be imported.

import nest_asyncio
import random

nest_asyncio.apply()

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.core.prompts import PromptTemplate
from llama_index.core.evaluation import (
    DatasetGenerator,
    FaithfulnessEvaluator,
    RelevancyEvaluator
)
from llama_index.llms.openai import OpenAI
from llama_index.core import Settings
import os
import time
import sys
import warnings

warnings.filterwarnings("ignore")

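try:
    # In Colab, read the key from the Secrets panel.
    from google.colab import userdata
    api_key = userdata.get("OPENAI_API_KEY")
except ImportError:
    api_key = None  # Not running in Colab

# Fall back to the environment when Colab did not supply a key.
if not api_key:
    api_key = os.getenv("OPENAI_API_KEY")

if api_key:
    # Export the resolved key so the OpenAI client can find it.
    os.environ["OPENAI_API_KEY"] = api_key
else:
    raise ValueError("❌ OpenAI API Key is missing! Add it to Colab Secrets or set the OPENAI_API_KEY environment variable.")

# Make modules in the parent directory importable.
sys.path.append(os.path.abspath(os.path.join(os.getcwd(), '..')))

print("OPENAI_API_KEY setup completed successfully!")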

Document Loading Process

This code defines the directory path where the project’s documents are stored and then uses SimpleDirectoryReader to read and load all the files from that directory into the "documents" variable for further processing.

data_dir = "/content/drive/MyDrive/New 90 Projects/generative_ai_project/Optimizing Chunk Sizes for Efficient and Accurate Document Retrieval using HyDE Evaluation"

documents = SimpleDirectoryReader(data_dir).load_data()

Evaluation Question Generation

This code snippet sets the number of evaluation questions to 25, selects the first 20 documents from the loaded set as evaluation data, uses a dataset generator to create questions from these documents, and then randomly picks up to 25 questions from the generated list (fewer if the generator returns fewer than 25).

num_eval_questions = 25
eval_documents = documents[0:20]
data_generator = DatasetGenerator.from_documents(eval_documents)
eval_questions = data_generator.generate_questions_from_nodes()
# Cap the sample size so random.sample does not raise when fewer questions exist.
k_eval_questions = random.sample(eval_questions, min(num_eval_questions, len(eval_questions)))
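Because the sample is random, each run evaluates a different set of questions, which makes chunk-size comparisons across runs harder to interpret. One option, sketched below as an assumption rather than something the original code does, is to draw the sample from a seeded generator. The helper name `sample_questions` and the seed value are hypothetical.

```python
import random
from typing import List


def sample_questions(questions: List[str], k: int, seed: int = 42) -> List[str]:
    """Draw up to k questions reproducibly without touching global random state."""
    rng = random.Random(seed)  # local generator seeded for repeatability
    return rng.sample(questions, min(k, len(questions)))


pool = [f"question {i}" for i in range(10)]
first = sample_questions(pool, 25)   # pool is smaller than k, so all 10 are returned
second = sample_questions(pool, 25)  # same seed, same order
subset = sample_questions(pool, 3)
```

Calling the helper twice with the same seed returns the same questions in the same order, so every chunk size is scored against an identical question set.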

GPT-4 Evaluation Setup

This code configures GPT-4 with a temperature of 0 and sets it as the default language model. It then defines a new prompt template that judges faithfulness by checking whether a piece of information is directly supported by the context, updates the faithfulness evaluator with this template, and finally initializes a relevancy evaluator.