Computer vision is a branch of AI that trains computers to interpret and understand the visual world. It uses deep learning models to teach computers to recognize what they see, so they can detect objects and react appropriately. If you are planning a final-year project or a career in computer vision, this article offers some guidance and project links.

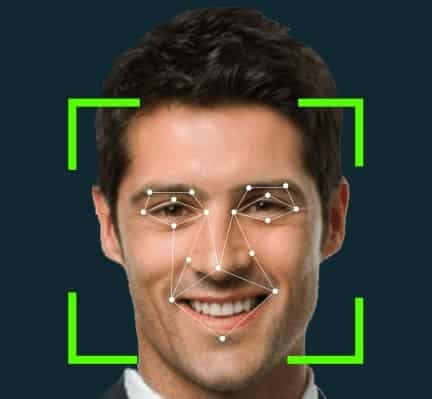

1. Face Recognition

In this project, we learn how to recognize human faces in live video using Python. The project is built on the face recognition network from dlib, a general-purpose software library whose toolkit can be used to create real-world machine learning applications.

Project Source Code: Face Recognition

2. License Plate Detection and Recognition

This project uses object detection and optical character recognition (OCR) to read the number on a vehicle's license plate. To build your own license plate detection system, you can follow this video tutorial or this article by another author.

Project Source Code: License Plate Detection And Recognition

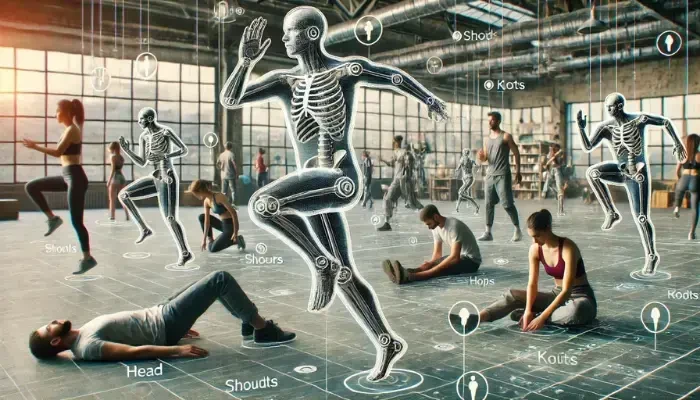

3. Human Pose Detection

Pose estimation is a computer vision problem in which we try to determine an object's position and orientation. This usually means detecting keypoint locations that describe the object. This lesson covers deep learning-based human pose estimation with OpenCV. You can follow this article to build your own human pose detection project.

Project Source Code: Real-Time Human Pose Detection Using YOLOv8

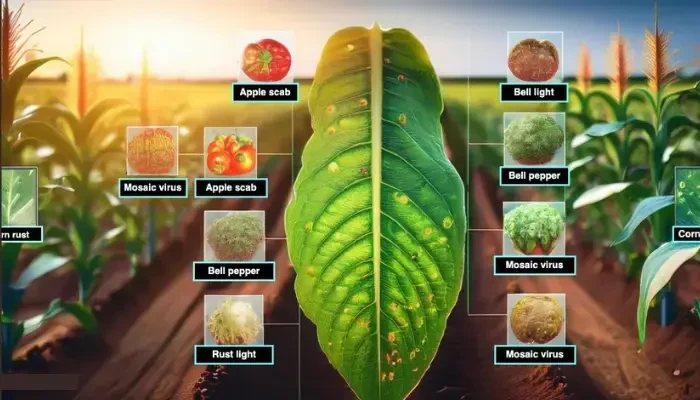

4. Crop Disease Detection Using YOLOv8

This project brings AI into agriculture by detecting crop diseases in real time using YOLOv8. Farmers upload images or videos of their crops, and the system identifies diseases in them. The model is trained on a Roboflow dataset to detect both healthy and diseased plants. Gradio provides an easy-to-use interface for uploading images and receiving diagnoses. Training runs on Google Colab, with OpenCV, NumPy, and Matplotlib used for processing, which keeps the project fast, scalable, and practical for modern farming.

Project Source Code: Crop Disease Detection Using YOLOv8

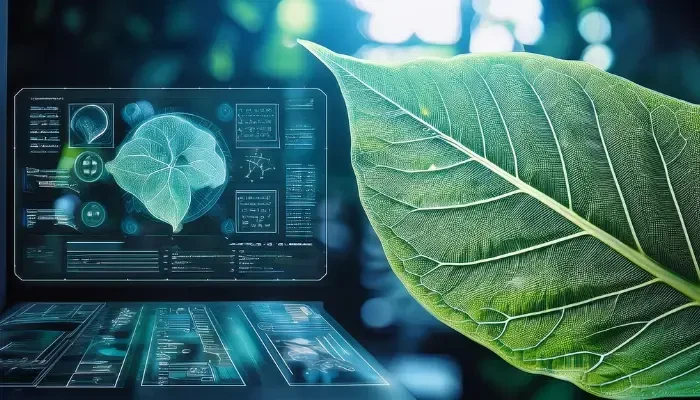

5. Banana Leaf Disease Detection Using Vision Transformer Model

This project helps farmers detect banana leaf diseases early using Vision Transformers and CNNs. Traditional disease detection is slow and manual; this system automates it with machine learning and computer vision. In the hybrid model, the CNN layers capture local patterns and the transformer's attention captures long-range dependencies, which improves accuracy. The dataset consists of banana leaf images labeled by disease. The system is built with Google Colab, TensorFlow, and Keras. With real-time detection and a voting mechanism for better predictions, this approach can help increase crop yield and improve farm management.

Project Source Code: Banana Leaf Disease Detection Using Vision Transformer Model
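
The article does not say how the voting mechanism works. As a minimal sketch, assume simple majority voting over the class labels predicted by several models for the same image. The function name `majority_vote` and the example labels are hypothetical and not taken from the project.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted most often; ties go to the label seen first."""
    if not predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(predictions)
    # most_common keeps first-seen order among labels with equal counts
    label, _ = counts.most_common(1)[0]
    return label

# Hypothetical labels from three models looking at the same leaf image
votes = ["sigatoka", "healthy", "sigatoka"]
print(majority_vote(votes))
```

Weighted or probability-averaging schemes are equally plausible; this version is only the simplest one to reason about.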

6. Image Generation Model Fine-Tuning With Diffusers Models

Ever wanted to create stunning AI-generated images with just a few clicks? This project fine-tunes Stable Diffusion models with the Hugging Face Diffusers library to generate high-quality images faster and with better precision. Adjusting learning rates, prompts, and model parameters improves image generation efficiency. The fine-tuned model is then converted back to Stable Diffusion format for broader applications. A Gradio interface lets users enter prompts and generate unique images. With Google Colab, Hugging Face, and CUDA optimization, this project blends art and AI, making it a good fit for creative and AI enthusiasts!

Project Source Code: Image Generation Model Fine-Tuning With Diffusers Models

7. Medical Image Segmentation With UNET

Ever wondered how doctors identify diseases so precisely from MRI, CT, or X-ray images? This project uses U-Net, a deep learning model, to segment medical images and detect regions of interest such as tumors and organs. U-Net's encoder-decoder architecture, with skip connections between the two halves, helps preserve image detail at the pixel level. The model is trained on publicly available datasets and processed with TensorFlow, Keras, and OpenCV. After training, it predicts segmentation masks and overlays them on the original images for easy interpretation. This project is a strong introduction to medical imaging and diagnosis!

Project Source Code: Medical Image Segmentation With UNET
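
To make the overlay step concrete, here is a dependency-free sketch of blending a binary segmentation mask onto an image. It assumes the image is a grid of RGB tuples and the mask is a grid of 0/1 values of the same shape. The function name `overlay_mask`, the highlight colour, and the `alpha` value are illustrative choices, not the project's. A real pipeline would do the same blend on NumPy arrays.

```python
def overlay_mask(image, mask, color=(255, 0, 0), alpha=0.5):
    """Blend `color` into `image` wherever `mask` is 1.

    image: rows of (r, g, b) tuples; mask: rows of 0/1 with the same shape.
    """
    blended = []
    for img_row, mask_row in zip(image, mask):
        out_row = []
        for pixel, flagged in zip(img_row, mask_row):
            if flagged:
                # Weighted average of the original pixel and the highlight colour
                pixel = tuple(
                    round((1 - alpha) * p + alpha * c)
                    for p, c in zip(pixel, color)
                )
            out_row.append(pixel)
        blended.append(out_row)
    return blended

image = [[(100, 100, 100), (100, 100, 100)],
         [(100, 100, 100), (100, 100, 100)]]
mask = [[0, 1],
        [0, 0]]
result = overlay_mask(image, mask)
```

Pixels outside the mask are returned unchanged, so the original scan stays readable around the highlighted region.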

8. Leaf Disease Detection Using Deep Learning

This project helps farmers detect plant diseases early using deep learning and CNN models like VGG16, VGG19, and EfficientNet-B4. By analyzing leaf images, the system accurately classifies healthy and diseased plants, reducing crop losses and improving yields. The dataset, sourced from Kaggle, includes 8320 training images and 2080 validation images across 26 plant disease categories. Using transfer learning and data augmentation (flipping, rotating, zooming), the model achieves high accuracy. The system is easy to use, scalable, and a great AI-powered solution for modern agriculture.

Project Source Code: Leaf Disease Detection Using Deep Learning
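
The augmentation idea can be sketched without any libraries. This example treats an image as a grid of pixel values and produces a flipped and a rotated copy. It is a simplification: the rotation is a fixed quarter turn rather than a random angle, zooming is left out, and the function names are hypothetical. The project itself most likely relies on the augmentation utilities of its deep learning framework.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the grid a quarter turn clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def augment(image):
    """Return the original plus two simple variants."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]

tiny = [[1, 2],
        [3, 4]]
for variant in augment(tiny):
    print(variant)
```

Each variant keeps the original label, so one labeled leaf image yields several training examples.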

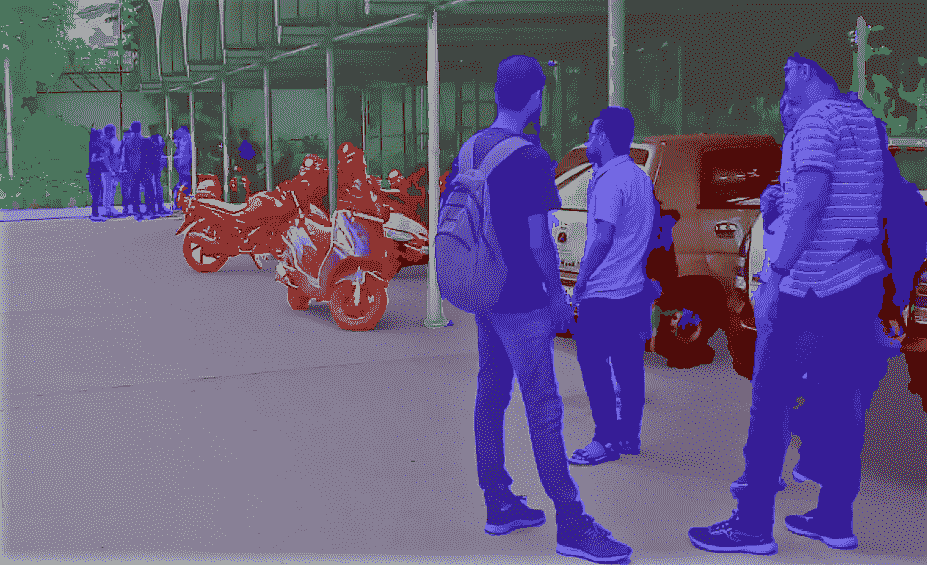

9. Semantic and Instance Segmentation on Videos

In this project, we learn how to use PixelLib in Python to perform semantic and instance segmentation on videos with just a few lines of code. PixelLib is a library for segmenting images and videos, designed to make segmentation easy to integrate into software systems.

Project Source Code: Semantic and Instance Segmentation on Videos

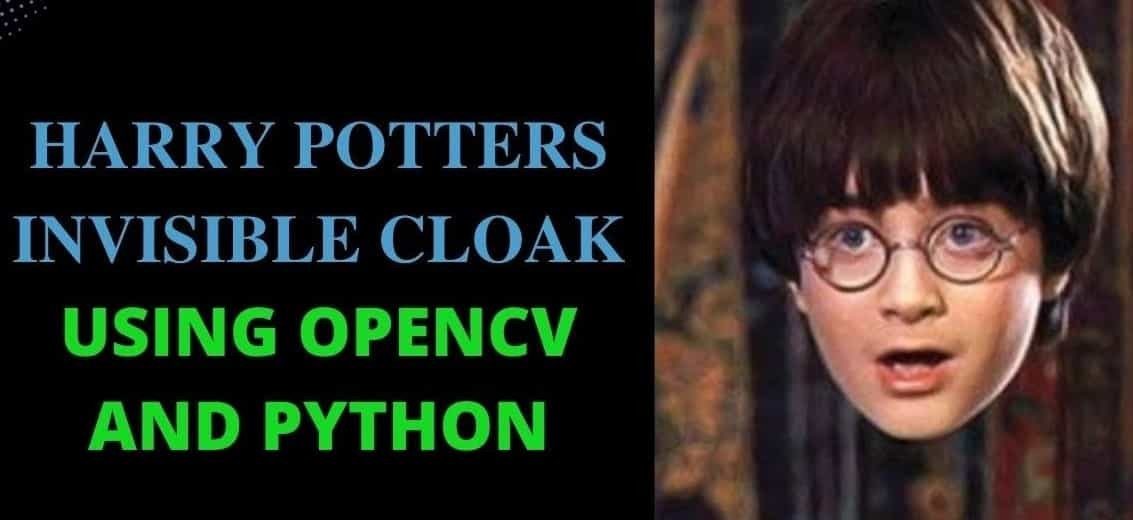

10. Colour Detection & Invisibility Cloak

The goal of this project is to detect colors in images. The technique can be used to recognize and manipulate colors in both photos and videos. The invisibility cloak is the best-known project built on color detection. In movies, invisibility is achieved by filming against a green screen; here we achieve it by removing the foreground layer.

Project Source Code: Colour Detection & Invisibility Cloak
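
The cloak effect boils down to two steps: decide which pixels match the cloak colour, then replace those pixels with a previously captured background. Below is a small standard-library sketch of that idea. It assumes a red cloak, and the threshold values and function names are illustrative guesses, not the project's settings. On real frames the same logic is usually done with OpenCV (`cv2.cvtColor` and `cv2.inRange`) instead of a per-pixel loop.

```python
import colorsys

def is_cloak_color(pixel, hue_lo=0.95, hue_hi=0.05, min_sat=0.5, min_val=0.3):
    """True if an (r, g, b) pixel falls in the (assumed red) cloak colour range."""
    r, g, b = (channel / 255.0 for channel in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Red hue wraps around 0, so accept values near either end of [0, 1]
    in_hue = h >= hue_lo or h <= hue_hi
    return in_hue and s >= min_sat and v >= min_val

def apply_cloak(frame, background):
    """Replace cloak-coloured pixels in `frame` with the saved background."""
    return [
        [bg if is_cloak_color(px) else px for px, bg in zip(f_row, b_row)]
        for f_row, b_row in zip(frame, background)
    ]

background = [[(10, 120, 40), (10, 120, 40)]]  # scene captured without the person
frame = [[(220, 20, 30), (200, 180, 150)]]     # red cloth, then a skin tone
print(apply_cloak(frame, background))
```

Thresholding in HSV rather than RGB keeps the match reasonably stable when lighting changes the brightness of the cloth.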

11. Vehicle Detection Model

Automated traffic management is one of the most important aspects of a smart city. This project applies data science skills to build a vehicle detection model that could help with smart traffic management. By following this tutorial, you can create an automatic vehicle detector and counter.

Project Source Code: Vehicle Detection Model

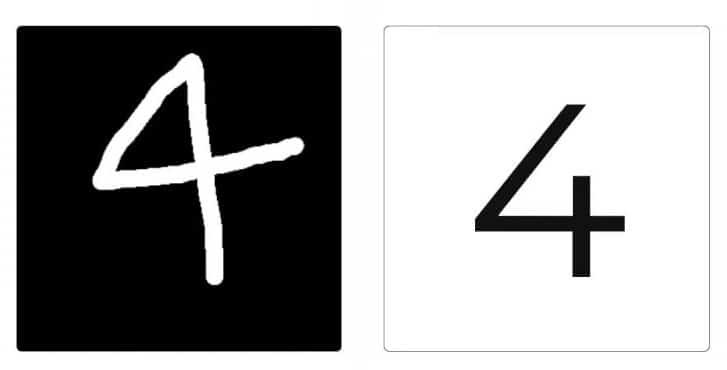

12. Digit Recognition

Digit recognition is the task of identifying the digit shown in an image using deep learning. This tutorial uses Python, TensorFlow, and Keras to predict the handwritten digits in the MNIST image dataset.

Project Source Code: Digit Recognition

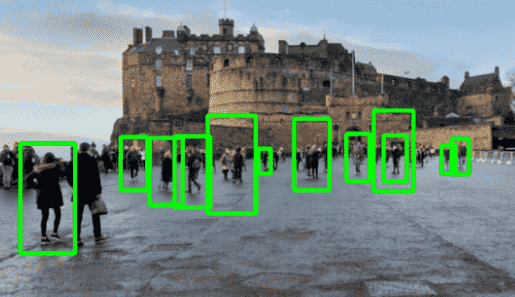

13. Detect Objects in Real-Time

The Viola-Jones algorithm, developed by Paul Viola and Michael Jones, is a machine learning-based method for detecting objects. In this article, we'll use the Python library OpenCV to build general-purpose software that can detect objects in a video feed.

Project Source Code: Detect Objects in Real-Time
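
One building block of Viola-Jones is the integral image, which lets the detector sum any rectangle of pixels with four lookups and so evaluate Haar-like features quickly. The sketch below shows only that building block on a tiny grayscale grid. The function names are hypothetical, and the boosted classifier cascade that makes up the rest of the algorithm is not shown.

```python
def integral_image(image):
    """Summed-area table with an extra zero row and column."""
    height, width = len(image), len(image[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += image[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table

def rect_sum(table, top, left, bottom, right):
    """Sum of pixels in rows top..bottom-1 and columns left..right-1."""
    return (table[bottom][right] - table[top][right]
            - table[bottom][left] + table[top][left])

def two_rect_feature(table, top, left, height, width):
    """Haar-like edge feature: left half minus right half of a window."""
    mid = left + width // 2
    left_half = rect_sum(table, top, left, top + height, mid)
    right_half = rect_sum(table, top, mid, top + height, left + width)
    return left_half - right_half

image = [[9, 9, 1, 1],
         [9, 9, 1, 1]]
table = integral_image(image)
print(two_rect_feature(table, 0, 0, 2, 4))  # prints 32
```

A large value means the left side of the window is much brighter than the right, which is the kind of edge pattern the detector learns to look for.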

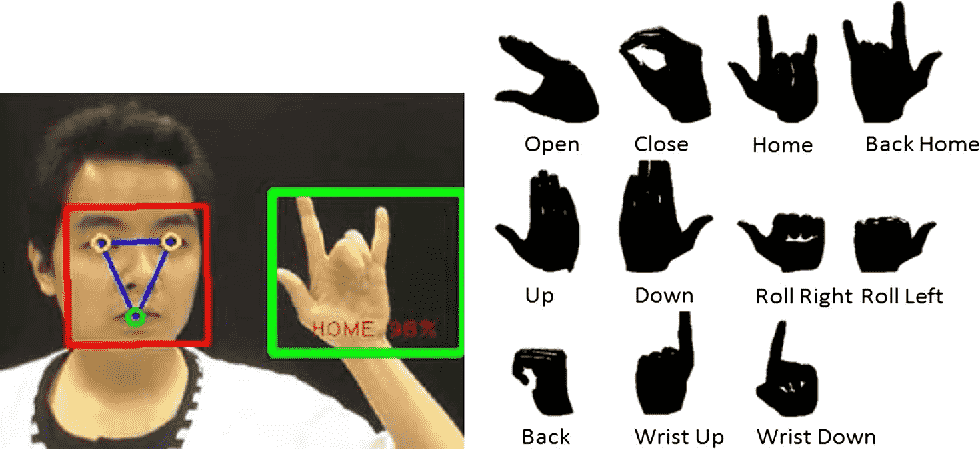

14. Real-time Hand Gesture Recognition

Applications for gesture recognition include virtual environment control, sign language translation, robot control, and music composition. In this article, you will learn to build a real-time hand gesture recognizer using the MediaPipe framework and TensorFlow, together with OpenCV and Python.

Project Source Code: Real-time Hand Gesture Recognition

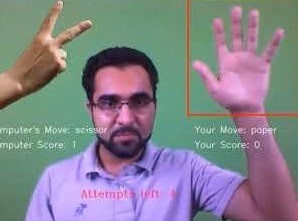

15. Playing Rock, Paper, Scissors with AI

To build a system that plays rock, paper, scissors, you can follow this article. When the player's hand is inside the on-screen box, a fine-tuned NASNetMobile model recognizes the hand sign. Once the model has predicted the sign, the AI generates its own move at random, and the winner of the round is determined.

Project Source Code: Playing Rock, Paper, Scissors with AI
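
The game logic that follows the hand-sign prediction is simple enough to sketch on its own. This example assumes the classifier has already produced one of three string labels. The names `ai_move` and `decide_winner` are hypothetical, and the classifier itself is not shown.

```python
import random

MOVES = ("rock", "paper", "scissors")
# Each key beats the move it maps to
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

def ai_move(rng=random):
    """Pick the AI's move uniformly at random."""
    return rng.choice(MOVES)

def decide_winner(player, ai):
    """Return 'player', 'ai', or 'tie' for one round."""
    if player == ai:
        return "tie"
    return "player" if BEATS[player] == ai else "ai"

player = "rock"  # in the project this would come from the hand-sign classifier
opponent = ai_move()
print(player, "vs", opponent, "->", decide_winner(player, opponent))
```

Keeping the rules in a lookup table means the winner check stays a one-liner and is easy to extend to game variants with more moves.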

Now you can choose from a variety of computer vision project ideas for your final-year project. Whichever project you pick will also improve your skills and prepare you for the workforce. If you have any recommendations, please leave a comment below. Your feedback is always valuable to us.