
Data Preprocessing for Deep Learning

Data preprocessing is a crucial part of deep learning. Raw data rarely comes in the format and quality that model training requires, so it usually cannot be fed into a model as-is.

Real-world data can be noisy, imbalanced, and unstructured, and no model learns well from it in that state. Preprocessing makes the data suitable for learning. Today, we will learn the concepts, advantages, and techniques of data preprocessing in deep learning, and how to implement them. Let's get started.

First, here are the topics we will cover to understand data preprocessing in depth.

- Introduction
- Data cleaning techniques
- Data transformation techniques
- Addressing imbalanced data
- Data augmentation strategies
- Effective data splitting and cross-validation
- Conclusion

Without further ado, let's dive into the content.

Introduction

Data preprocessing refers to the process of cleaning and transforming raw data into a format that can be used to train deep learning models effectively. Its aim is to improve the quality and usefulness of the data and to ensure that it meets the requirements of deep learning algorithms. In real-world scenarios, data comes in many forms, such as numeric, categorical, text, image, time series, audio, and video data. The right preprocessing technique depends on both the data type and the requirements of the model, so it is important to understand these concepts well.

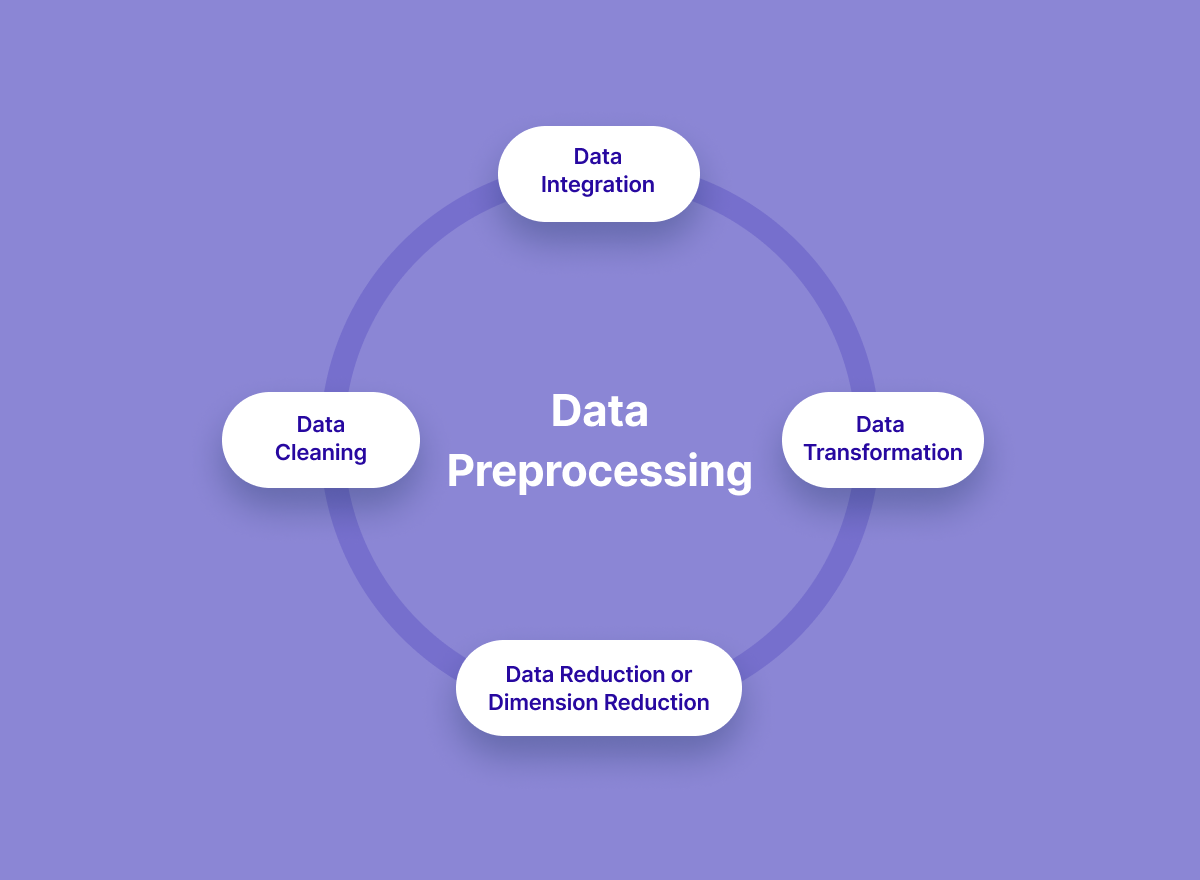

Key Components of Data Preprocessing in Deep Learning

Data preprocessing plays a pivotal role in deep learning workflows. Its key components and advantages are given below.

- Data Cleaning/Data Quality Assurance: Data cleaning (sometimes called data cleansing) is the modification of a dataset to address incomplete or missing data, eliminate redundancies, and rectify incorrect data. By identifying and dealing with missing values, outliers, and noise, preprocessing ensures the integrity of the data and makes model training more reliable.

- Data Transformation: Data transformation changes the scale, distribution, or representation of variables. For numeric and categorical data, it can mean normalization, standardization, and encoding. For text data, we can perform tokenization, stemming, vectorization, and others. For image data, we can resize and normalize the images, among others. Other data types have their own transformation techniques.

- Feature Engineering: Data preprocessing enables feature engineering, which creates new features from existing ones to help the model make better predictions. Although deep learning models can automatically extract useful features from raw data, manual feature engineering is still effective in many cases.

- Dimensionality Reduction: Dimensionality reduction decreases the number of features (or "dimensions") in a high-dimensional dataset while retaining as much useful information as possible. Its main objective is to make the data representation more compact and easier to work with while preserving the important relationships and patterns in the original data. The number of features can be very high in many real-world datasets, particularly in areas like image processing, natural language processing, genomics, and sensor data.

- Handling Imbalanced Data: In classification problems, the classes in the dataset can be imbalanced, while models usually learn better from a balanced dataset. To overcome this problem, we can oversample the minority class, undersample the majority class, or generate new synthetic data.

- Data Splitting: The dataset is usually split into training, validation, and test sets, so that the model is trained on one part and evaluated on data it has not seen. This helps detect underfitting and overfitting. Other techniques, such as cross-validation, give a more robust estimate of model performance.

- Data Augmentation: Data augmentation is a common method in machine learning and deep learning to increase a dataset's size and diversity. It produces new, slightly altered versions of the original data by applying various transformations to existing samples. This can improve the generalization and robustness of models.

- Normalization & Scaling: These put all input features on a comparable scale, which typically helps gradient-based training converge faster toward the minimum of the loss and can improve model performance. Faster convergence can also reduce the computational resources needed for training.

- Handling Categorical Data: Most models require numerical input, so categories must be converted into numerical values. For that, we can use one-hot encoding, label encoding, and other encoding techniques.

- Efficient Model Training: By reducing unnecessary computations and memory usage, effective data preprocessing improves computational efficiency. It also makes training easier, which helps the model converge faster and better.

These are the key components of data preprocessing for effective model training. Now let's look at the step-by-step process a deep learning engineer can follow to preprocess data effectively.

Step-by-Step Process for Effective Data Preprocessing

First, understand the data types in the dataset and which preprocessing techniques apply to each of them. Then perform data cleaning to handle missing data, noise, and outliers. If the dataset is high-dimensional, remove unnecessary or redundant features. If the dataset is imbalanced, try to balance it. To increase the diversity of the dataset, apply data augmentation to the existing data. Then apply data transformation techniques so that the algorithms can train on the data and converge faster. Next, automatic or manual feature engineering can be used to enhance model performance. Split the dataset for training and evaluation; any statistics learned by scalers, imputers, resamplers, and similar steps should be fitted on the training split only, so that no information from the evaluation data leaks into training. Finally, try to reduce unnecessary computation and memory usage during training. This overall approach applies to almost every data preprocessing problem.
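As a minimal sketch of part of this workflow, assuming scikit-learn and a small synthetic numeric dataset (the data below is made up for illustration), the split can be made first and the cleaning (mean imputation) and transformation (standardization) steps then fitted on the training portion only:

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: 100 samples, 3 numeric features, about 10% missing values
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(100, 3))
X[rng.random(X.shape) < 0.1] = np.nan
y = rng.integers(0, 2, size=100)

# 1. Split first, so the evaluation data never influences the preprocessing statistics
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 2. Chain cleaning (mean imputation) and transformation (standardization)
preprocess = make_pipeline(SimpleImputer(strategy="mean"), StandardScaler())

# 3. Fit on the training split only, then reuse the learned statistics for the test split
X_train_prep = preprocess.fit_transform(X_train)
X_test_prep = preprocess.transform(X_test)

print(X_train_prep.mean(axis=0))  # close to 0 for every feature
print(X_train_prep.std(axis=0))   # close to 1 for every feature
```

Because the imputer and scaler learn their means and standard deviations from X_train alone, the test set is transformed with training statistics, which mirrors how the model will later see new data.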

Understanding the challenges posed by raw data in deep learning

Raw data refers to the original, unprocessed data that has been collected but not yet prepared for model training. It may be messy and contain errors, inconsistencies, missing values, and outliers. It can come from various domains such as text, images, audio, time series, and tabular data with numerical and categorical features. In this form, data is not suitable for a deep learning model to learn from effectively. Text data may contain spelling errors, abbreviations, emojis, multiple languages, and so on. Images may come in various formats, sizes, and orientations and may contain noise, varied lighting conditions, irrelevant content, and so on. Audio may have varied volume levels, background noise, different sample rates, long silences, and so on. Time series data may contain outliers, missing values, irregular intervals between measurements, and so on. Tabular data may contain missing values, mixed data types, irrelevant features, and so on. Raw data can also be imbalanced and high-dimensional, with features on different scales, sequential dependencies, sensitive information, and so on. This is why raw data is not suitable for model training without preprocessing.

Benefits of Data Preprocessing

Data preprocessing has a profound effect on a deep learning model's performance, because it maximizes the model's ability to learn effectively. Some key benefits are given below.

- Clean and preprocessed data improve the accuracy of the models.
- Data preprocessing reduces the risk of overfitting.
- Normalization and standardization help the model converge faster, which speeds up training.
- It improves the robustness of the model.
- It handles imbalanced data.
- It enhances the interpretability of the model's performance.

Overall, data preprocessing is an important technique for improving the accuracy, robustness, and interpretability of the model.

Data Cleaning Techniques

Data cleaning refers to a process of detecting and correcting or removing corrupt, inaccurate, incomplete, or irrelevant parts of the data in a dataset. It improves the quality of the data before feeding it into a model. Some of the common techniques of data cleaning are given below.

- Handle missing values: Missing data is a common problem in real datasets and can degrade model performance. It can be handled by removing the rows (or columns) that contain missing values, or by filling them in (imputation) with a constant or with the mean, median, or mode of the column.
- Remove duplicates: Duplicate rows can bias the model and make it perform poorly on unseen data, so they should be identified and removed.
- Outlier detection and removal: Outliers are data points that differ significantly from other observations and can hurt model performance. There are several techniques for handling them, including statistical methods such as the Z-score and IQR (interquartile range) methods, and machine learning methods such as Isolation Forest.
- Noise reduction: Noise consists of random fluctuations in the data that can interfere with learning. To reduce it, we can use smoothing techniques such as moving averages, low-pass filtering, polynomial smoothing, and others.
- Data formatting: Models cannot consume every data type directly, so we should ensure the data is in a consistent, suitable format. This involves converting data types, fixing data formats, standardizing values, and so on.
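The IQR method mentioned in the list above can be sketched with pandas as follows, assuming the common 1.5 * IQR rule (Tukey's fences); the function name iqr_outlier_mask is hypothetical. Values below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR are flagged as outliers.

```python
import pandas as pd

def iqr_outlier_mask(series: pd.Series, k: float = 1.5) -> pd.Series:
    """Return True for values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1 = series.quantile(0.25)  # first quartile
    q3 = series.quantile(0.75)  # third quartile
    iqr = q3 - q1               # interquartile range
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (series < lower) | (series > upper)

# Example values with one obvious outlier (160)
c = pd.Series([11, 12, 13, 14, 15, 160])
mask = iqr_outlier_mask(c)
print(c[mask])   # flagged outliers
print(c[~mask])  # remaining values
```

Unlike the Z-score, the quartiles are barely affected by the extreme value itself, which is why the IQR rule still works on very small samples like this one.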

Implement Data Cleaning

To perform data cleaning, let's first import the necessary libraries and create a small dummy dataset. The full code is also available in Google Colab.

# Import necessary libraries
import pandas as pd  # pandas library is used for data manipulation and analysis
import numpy as np  # numpy library is used for numerical computations and array operations
from scipy import stats  # scipy library provides various statistical functions and operations

data = {
    'A': [1, 2, np.nan, 4, 5, 5],
    'B': [6, 7, 8, np.nan, 10, 10],
    'C': [11, 12, 13, 14, 15, 160]
}

df = pd.DataFrame(data)

This dummy dataset contains some missing values, marked as np.nan. We can either remove the rows containing missing values or replace them with another number, e.g. the mean of the column. After that, we will remove duplicate rows. We also need to handle outliers, as they can degrade the performance of the model. Then we will smooth the values with noise reduction. Finally, we will set the data types of the columns explicitly.

# Handling missing values
# Option 1: Remove rows with missing values
df_no_missing = df.dropna()
# Option 2: Fill missing values with a constant or the mean/median/mode of the column
df_filled_na = df.fillna(df.mean())  # filling with the column mean

# Removing duplicate rows
df_no_duplicates = df.drop_duplicates()

# Removing outliers with the Z-score method
# stats.zscore returns NaN for columns that contain NaN, so use the filled data.
# With only 6 rows, |z| can never exceed sqrt(5) (about 2.24), so the usual
# threshold of 3 would flag nothing here; 2 is used for this toy example.
z_scores = np.abs(stats.zscore(df_filled_na))
df_no_outliers = df_filled_na[(z_scores < 2).all(axis=1)]  # adjust the threshold as needed

# Noise reduction with a moving average over a window of 2 rows
df_smoothed = df.rolling(window=2).mean()
print("\nDataFrame after noise reduction:")
print(df_smoothed)

# Data formatting (make the data types of the columns explicit)
df['A'] = df['A'].astype('float')
df['B'] = df['B'].astype('float')
df['C'] = df['C'].astype('int')

These are the main data cleaning techniques in deep learning workflows. There are other ways to clean and structure data as well, depending on the dataset and the model being trained.

Data Transformation Techniques

Data transformation is a vital part of data preprocessing that allows the model to learn more effectively. It is the process of converting data from one format or structure to another format or structure. There are many techniques for various purposes. Among them, some common techniques are given below.

- Scaling: The features of a dataset often have very different scales, which makes it harder for the model to learn. Scaling puts all the features on a comparable scale. Common techniques include standardization (rescaling each feature to zero mean and unit variance) and min-max scaling (rescaling each feature to a fixed range such as [0, 1]).

- Normalization: The terms are often used interchangeably, but in the sense used here (scikit-learn's normalize function), normalization rescales each sample (row) to unit norm, whereas scaling works on each feature (column). Keeping inputs at a comparable magnitude helps optimization algorithms such as gradient descent converge faster and can make training more numerically stable. The L2 norm is a common choice for normalization.

- One-hot encoding: It converts categorical values into a binary form. For each unique value of a categorical feature, a new binary column is created, with 1 in the rows that have that value and 0 elsewhere.

- Label encoding: Label encoding represents each category with an integer. For ordinal variables, where the order of the categories is meaningful, the integers should follow that ranking so that models can make use of it. For example, if a feature has the values good, better, and best, label encoding maps them to 0, 1, and 2, provided the mapping is chosen in that order.

- Generating new features from existing ones: Creating new features from existing ones is a common practice in machine learning to enhance a model's performance and predictive power. New features can be created by combining, transforming, or extracting information from existing ones, for example by applying logarithmic, exponential, or power transformations to existing features.

These techniques enhance model performance, interpretability, and computational efficiency.
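One practical caveat for label encoding: scikit-learn's LabelEncoder assigns integers in sorted (alphabetical) order of the class names, which need not match a meaningful ranking. The sketch below compares it with OrdinalEncoder, where the category order is passed explicitly, using the good/better/best values from the label encoding example.

```python
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

values = ["good", "better", "best", "good"]

# LabelEncoder sorts the classes alphabetically: best=0, better=1, good=2
le = LabelEncoder()
label_codes = le.fit_transform(values)
print(le.classes_, label_codes)

# OrdinalEncoder with explicit categories keeps the intended ranking: good=0, better=1, best=2
oe = OrdinalEncoder(categories=[["good", "better", "best"]])
ordinal_codes = oe.fit_transform([[v] for v in values]).ravel()  # expects a 2-D input
print(ordinal_codes)
```

LabelEncoder is designed for target labels, so for ordinal input features an encoder with an explicit category order is usually the safer choice.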

Implement Data Transformation

We will now work on transforming the dataset. Let's import the necessary libraries and create a small dataset with numeric and categorical columns.

# Importing pandas library as 'pd' to work with data frames
import pandas as pd
# Importing numpy library as 'np' for numerical operations
import numpy as np
# Importing preprocessing module from scikit-learn for data preprocessing tasks
from sklearn import preprocessing
# Importing PCA (Principal Component Analysis) from scikit-learn for dimensionality reduction
from sklearn.decomposition import PCA

data = {
    'A': [1, 2, 3, 4, 5],
    'B': [6, 7, 8, 9, 10],
    'C': ['cat', 'dog', 'cat', 'dog', 'rat'],
    'D': ['red', 'blue', 'red', 'green', 'blue']
}

df = pd.DataFrame(data)

First, we scale the numeric features so that they share a common scale. Then we normalize the data. We need to encode the categorical data into numerical values. We can generate new features if the dataset does not contain enough informative features. Conversely, we can reduce the number of features if the dataset contains too many.

# 1. Scaling
scaler = preprocessing.StandardScaler()  # standardize each feature to mean=0, std=1
scaled_df = scaler.fit_transform(df[['A', 'B']])  # 'A' and 'B' are the numeric features
print("Scaled DataFrame:\n", pd.DataFrame(scaled_df, columns=['A', 'B']))

# 2. Normalization
normalized_df = preprocessing.normalize(df[['A', 'B']], norm='l2')  # scale each row to unit L2 norm
print("\nNormalized DataFrame:\n", pd.DataFrame(normalized_df, columns=['A', 'B']))

# 3. One-hot encoding
onehot = preprocessing.OneHotEncoder()
onehot_df = onehot.fit_transform(df[['C']]).toarray()  # 'C' is a categorical feature
# get_feature_names_out replaces get_feature_names, which was removed in scikit-learn 1.2
print("\nOne-hot Encoded DataFrame:\n", pd.DataFrame(onehot_df, columns=onehot.get_feature_names_out(['C'])))

# 4. Label encoding
le = preprocessing.LabelEncoder()
df['D'] = le.fit_transform(df['D'])  # 'D' is a categorical feature; codes follow alphabetical order
print("\nDataFrame after Label Encoding:\n", df)

# 5. Generating new features
df['E'] = df['A'] + df['B']  # new feature 'E' is the sum of 'A' and 'B'
print("\nDataFrame after Generating New Features:\n", df)

# 6. Dimensionality reduction (PCA)
pca = PCA(n_components=2)  # reduce to 2 dimensions
pca_df = pca.fit_transform(df[['A', 'B', 'E']])  # PCA on the numeric features 'A', 'B', and 'E'
print("\nDataFrame after PCA:\n", pd.DataFrame(pca_df, columns=['PC1', 'PC2']))
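To see what the scaling and normalization calls in steps 1 and 2 compute, this small sketch reproduces them by hand with NumPy on the same 'A' and 'B' values and checks them against scikit-learn. Min-max scaling is added only for comparison; it is not used in the code above. Note that standardization and min-max scaling work per column (feature), while L2 normalization works per row (sample).

```python
import numpy as np
from sklearn import preprocessing

# Same values as columns 'A' and 'B' in the example DataFrame
X = np.array([[1.0, 6.0], [2.0, 7.0], [3.0, 8.0], [4.0, 9.0], [5.0, 10.0]])

# Standardization, per column: z = (x - mean) / std, using the population std (ddof=0)
z_manual = (X - X.mean(axis=0)) / X.std(axis=0)

# Min-max scaling, per column, to [0, 1]: (x - min) / (max - min)
mm_manual = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# L2 normalization, per row: x / ||x||_2
l2_manual = X / np.linalg.norm(X, axis=1, keepdims=True)

# Compare with the scikit-learn equivalents (each line should print True)
print(np.allclose(z_manual, preprocessing.StandardScaler().fit_transform(X)))
print(np.allclose(mm_manual, preprocessing.MinMaxScaler().fit_transform(X)))
print(np.allclose(l2_manual, preprocessing.normalize(X, norm="l2")))
```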

Addressing Imbalanced Data

An imbalanced dataset is a common problem in classification, where the classes are not equally represented. It can bias the model toward the majority class, resulting in poor performance on the minority class. Some techniques for handling imbalanced datasets are given below.

- Under-sampling: This reduces the number of samples in the majority class to balance the target classes. It can help balance the classes but may discard valuable information.
- Over-sampling: This increases the number of samples in the minority class by randomly replicating them. The drawback is that the duplicated points may cause overfitting.
- SMOTE (Synthetic Minority Over-sampling Technique): An improvement on over-sampling. Instead of creating exact copies, it generates synthetic minority examples by interpolating between a minority sample and one of its nearest minority-class neighbors.
- Ensemble methods: Ensemble algorithms such as bagging and boosting can be useful for imbalanced datasets. In particular, Balanced Random Forest and EasyEnsemble are designed specifically for imbalanced data.
- Cost-sensitive training: This gives a higher penalty to misclassified minority-class instances.
- Use appropriate evaluation metrics: Not all evaluation metrics are appropriate for imbalanced datasets; accuracy in particular can look high even when the minority class is never predicted. ROC-AUC, precision, recall, and F1-score are better choices.
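A quick sketch of why accuracy can mislead on imbalanced data, assuming scikit-learn and made-up labels: a "model" that always predicts the majority class scores 95% accuracy while never detecting a single minority-class sample.

```python
from sklearn.metrics import accuracy_score, f1_score, recall_score

# Hypothetical labels: 95 majority-class (0) and 5 minority-class (1) samples
y_true = [0] * 95 + [1] * 5
# A useless "model" that always predicts the majority class
y_pred = [0] * 100

acc = accuracy_score(y_true, y_pred)
rec = recall_score(y_true, y_pred)               # share of minority samples found
f1 = f1_score(y_true, y_pred, zero_division=0)   # zero_division avoids a warning for no positive predictions

print(acc)  # 0.95
print(rec)  # 0.0
print(f1)   # 0.0
```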

Preprocessing Imbalanced Dataset

Let's handle an imbalanced dataset with code.

# Importing numpy library as 'np' for numerical operations (np.unique is used below)
import numpy as np
# Importing pandas library as 'pd' to work with data frames
import pandas as pd
# Importing train_test_split function from scikit-learn to split the dataset into training and testing sets
from sklearn.model_selection import train_test_split
# Importing SMOTE (Synthetic Minority Over-sampling Technique) and RandomOverSampler for oversampling the minority class
from imblearn.over_sampling import SMOTE, RandomOverSampler
# Importing RandomUnderSampler for undersampling the majority class
from imblearn.under_sampling import RandomUnderSampler
# Importing Pipeline from imblearn to create a data preprocessing and modeling pipeline
from imblearn.pipeline import Pipeline
# Importing RandomForestClassifier from scikit-learn as the machine learning model
from sklearn.ensemble import RandomForestClassifier
# Importing confusion_matrix, accuracy_score, and f1_score from scikit-learn for model evaluation
from sklearn.metrics import confusion_matrix, accuracy_score, f1_score
# Importing class_weight from scikit-learn to handle class imbalance in the model
from sklearn.utils import class_weight

# Assume we have a binary classification problem and df is our DataFrame
# 'target' is the column with the target variable (assumed: 0 = majority class, 1 = minority class)
X = df.drop('target', axis=1)
y = df['target']

# Split the data into train and test sets; stratify=y keeps the class proportions equal in both sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)

To handle an imbalanced dataset, we can either rebalance the training data by undersampling, oversampling, or SMOTE, or use appropriate algorithms, cost-sensitive training, and suitable evaluation metrics. Resampling should be applied to the training set only, so that the test set still reflects the real class distribution. Each of these approaches is implemented below.

# 1. Under-sampling: randomly drop samples from the majority class
under_sampler = RandomUnderSampler(sampling_strategy='majority')
X_train_under, y_train_under = under_sampler.fit_resample(X_train, y_train)

# 2. Over-sampling: randomly duplicate samples from the minority class
over_sampler = RandomOverSampler(sampling_strategy='minority')
X_train_over, y_train_over = over_sampler.fit_resample(X_train, y_train)

# 3. SMOTE: create synthetic minority samples
smote = SMOTE()
X_train_smote, y_train_smote = smote.fit_resample(X_train, y_train)

# 4. Ensemble methods (Random Forest Classifier as an example)
# The imblearn Pipeline applies SMOTE only during fit, never to the test data
classifier = RandomForestClassifier()
model = Pipeline([('SMOTE', smote), ('Random Forest Classifier', classifier)])
model.fit(X_train, y_train)
predictions = model.predict(X_test)

# 5. Cost-sensitive training
# Recent scikit-learn versions require classes and y as keyword arguments
weights = class_weight.compute_class_weight(class_weight='balanced', classes=np.unique(y_train), y=y_train)
weight_dict = {class_label: weight for class_label, weight in zip(np.unique(y_train), weights)}
classifier_weighted = RandomForestClassifier(class_weight=weight_dict)
classifier_weighted.fit(X_train, y_train)

# 6. Use appropriate evaluation metrics on the predictions from step 4
print("Confusion Matrix:\n", confusion_matrix(y_test, predictions))
print("Accuracy Score:\n", accuracy_score(y_test, predictions))
print("F1 Score:\n", f1_score(y_test, predictions))
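To make the SMOTE idea concrete, here is a simplified, from-scratch NumPy sketch of its core interpolation step. It is illustrative only and is not imblearn's implementation; the function name smote_like_samples and its parameters are hypothetical. Each synthetic point is placed a random fraction of the way from a minority sample toward one of its k nearest minority-class neighbors.

```python
import numpy as np

def smote_like_samples(X_minority, n_new, k=3, seed=0):
    """Simplified SMOTE-style oversampling (illustrative; not imblearn's implementation)."""
    X = np.asarray(X_minority, dtype=float)
    if not 0 < k < len(X):
        raise ValueError("k must be between 1 and the number of minority samples - 1")
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances between all minority samples
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # indices of the k nearest neighbors
    new_samples = []
    for _ in range(n_new):
        i = rng.integers(len(X))             # random minority sample
        j = neighbors[i, rng.integers(k)]    # one of its k nearest neighbors
        lam = rng.random()                   # interpolation factor in [0, 1)
        new_samples.append(X[i] + lam * (X[j] - X[i]))
    return np.array(new_samples)

# Usage: four minority points on the line y = x
X_min = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
print(smote_like_samples(X_min, n_new=5, k=2))
```

Because every new point lies on a segment between two real minority samples, the synthetic data stays inside the region the minority class already occupies instead of duplicating exact points.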

Data Augmentation Strategies

Deep learning models generally learn better and become more robust when trained on large, diverse datasets. Data augmentation increases the amount and diversity of data available for training: instead of collecting new data, it creates transformed versions of the existing data points. The augmentation techniques depend on the data type.

For image data, we can flip images horizontally or vertically, rotate them by a certain angle, scale them up or down, crop them, translate (shift) them, inject random noise, adjust brightness and contrast to simulate different lighting conditions, and much more.
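A minimal NumPy sketch of several of these image augmentations, assuming images are stored as H x W x C float arrays scaled to [0, 1]. The function name augment_image and the specific noise level, crop size, and brightness factor are illustrative choices, not fixed values; deep learning libraries provide ready-made, randomized versions of these transforms.

```python
import numpy as np

def augment_image(image: np.ndarray, rng: np.random.Generator) -> dict:
    """Return augmented copies of an H x W x C image whose values lie in [0, 1]."""
    h, w, _ = image.shape
    # Random top-left corner for a crop covering 3/4 of the height and width
    top = rng.integers(0, h // 4 + 1)
    left = rng.integers(0, w // 4 + 1)
    return {
        "flip_horizontal": image[:, ::-1, :],            # mirror left-right
        "flip_vertical": image[::-1, :, :],              # mirror top-bottom
        "rotate_90": np.rot90(image, k=1, axes=(0, 1)),  # rotate by 90 degrees
        "crop": image[top:top + 3 * h // 4, left:left + 3 * w // 4, :],
        "noise": np.clip(image + rng.normal(0.0, 0.05, size=image.shape), 0.0, 1.0),
        "brightness": np.clip(image * 1.2, 0.0, 1.0),    # 20% brighter, clipped to [0, 1]
    }

# Usage with a random stand-in "image" of height 8, width 12 and 3 channels
rng = np.random.default_rng(0)
image = rng.random((8, 12, 3))
for name, augmented in augment_image(image, rng).items():
    print(name, augmented.shape)
```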

For text data, we can replace words with their synonyms, randomly delete words, randomly swap two sentences, or translate a sentence into another language and then back (back-translation).
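A small pure-Python sketch of three of these text augmentations on a tokenized sentence. The synonym table SYNONYMS and the function names are hypothetical; a real pipeline might draw synonyms from a thesaurus such as WordNet. The swap here operates on words, but the same function works on a list of sentences. Back-translation needs a translation model and is not shown.

```python
import random

# Hypothetical, hand-written synonym table used only for this example
SYNONYMS = {"quick": ["fast", "speedy"], "happy": ["glad", "cheerful"]}

def synonym_replacement(words, rng):
    """Replace every word that has an entry in SYNONYMS with a random synonym."""
    return [rng.choice(SYNONYMS[w]) if w in SYNONYMS else w for w in words]

def random_deletion(words, p, rng):
    """Drop each word with probability p, keeping at least one word."""
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]

def random_swap(words, n_swaps, rng):
    """Swap the positions of two randomly chosen items, n_swaps times."""
    words = list(words)  # copy so the input list is not modified
    for _ in range(n_swaps):
        i, j = rng.randrange(len(words)), rng.randrange(len(words))
        words[i], words[j] = words[j], words[i]
    return words

rng = random.Random(0)
sentence = "the quick dog is happy today".split()
print(synonym_replacement(sentence, rng))
print(random_deletion(sentence, 0.2, rng))
print(random_swap(sentence, 2, rng))
```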

For audio data, we can add background noise, change the pitch, speed the sound up or slow it down, and so on. For tabular datasets, we can create synthetic examples in the feature space with SMOTE, add random noise to numeric features, and so on. Other data types have similar techniques for increasing the number and diversity of samples, which leads to a more robust model.

Effective Data Splitting and Cross-Validation

Data splitting and cross-validation divide the dataset for training, validation, and testing of the model. They let us check whether the model generalizes well, which is essential for assessing model performance, tuning hyperparameters, and avoiding overfitting. Here we discuss the two approaches given below.

Data splitting

Here the dataset is split into three sets: a training set, a validation set, and a test set. The training set is used to train the model; the validation set is used to tune hyperparameters, detect overfitting, and make decisions about the model architecture; and the test set is used to evaluate the final model. You can split the dataset in any proportion, but common training:validation:testing splits are 70%:15%:15% and 80%:10%:10%.
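A minimal sketch of a 70%:15%:15% split with scikit-learn, assuming a made-up dataset of 100 samples. train_test_split only produces two parts, so it is applied twice: first to hold out 30% of the data, then to divide that held-out portion in half.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 100 samples with 4 features and binary labels
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
y = rng.integers(0, 2, size=100)

# Hold out 30% of the data, then split that portion into validation and test halves
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.30, random_state=42)
X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.50, random_state=42)

print(len(X_train), len(X_val), len(X_test))  # 70 15 15
```

For a classification problem with imbalanced classes, passing stratify=y (and stratify=y_temp in the second call) keeps the class proportions similar across all three sets.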

Cross-Validation

A single split can give an unreliable estimate of performance on unseen data, because one split may not represent the whole data distribution. Cross-validation addresses this and provides a more robust estimate of model performance. Some cross-validation techniques are given below.

- K-fold cross-validation: The dataset is divided into K subsets (folds) of equal size, and the model is trained K times. Each time, a different fold is used as the validation set and the remaining K-1 folds form the training set. The average performance metric across all K runs is used as the overall performance estimate.
- Stratified K-fold cross-validation: A variant of K-fold used for imbalanced datasets. It ensures that each fold contains approximately the same percentage of samples of each target class as the full dataset.
- Leave-one-out cross-validation: A special case of K-fold where K equals the total number of samples in the dataset. It is very computationally expensive.
- Time series cross-validation: It preserves the temporal order of observations, so the validation set always comes after the training set in time. This is the approach to use for time series data, where the model must not be trained on future observations.

Implement Data splitting and cross-validation

Let's implement the data splitting and cross-validation techniques with code.

# Simple data splitting
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

We can use various types of k-fold cross-validation, and other strategies such as leave-one-out cross-validation if necessary.

# K-fold cross-validation

from sklearn.model_selection import KFold
kfold = KFold(n_splits=5)
for train_index, test_index in kfold.split(X):
    # Select the rows of this fold; train and evaluate a model on them here
    X_train, X_test = X.iloc[train_index], X.iloc[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# Stratified K-fold cross-validation (keeps class proportions in every fold)
from sklearn.model_selection import StratifiedKFold
stratified_kfold = StratifiedKFold(n_splits=2)
for train_index, test_index in stratified_kfold.split(X, y):
    X_train, X_test = X.iloc[train_index], X.iloc[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# Leave one out cross-validation (a single sample in each test fold)
from sklearn.model_selection import LeaveOneOut
loo = LeaveOneOut()
for train_index, test_index in loo.split(X):
    X_train, X_test = X.iloc[train_index], X.iloc[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# Time series cross-validation (training indices always precede test indices)
from sklearn.model_selection import TimeSeriesSplit
tscv = TimeSeriesSplit(n_splits=2)
for train_index, test_index in tscv.split(X):
    X_train, X_test = X.iloc[train_index], X.iloc[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]
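The loops above only generate the train and validation indices for each fold. To obtain the averaged score that K-fold cross-validation reports, scikit-learn's cross_val_score can train and score a model on every fold. A minimal sketch, assuming a synthetic imbalanced dataset from make_classification and a logistic regression model, both chosen only for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Hypothetical imbalanced dataset: roughly 90% class 0 and 10% class 1
X, y = make_classification(n_samples=500, n_features=10, weights=[0.9, 0.1], random_state=0)

model = LogisticRegression(max_iter=1000)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# One F1 score per fold; their mean is the overall performance estimate
scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
print(scores)
print(f"Mean F1: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Reporting the standard deviation alongside the mean shows how much the estimate varies from fold to fold, which a single train/test split cannot reveal.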

Conclusion

Data preprocessing is a significant part of deep learning workflows. It not only cleans, transforms, and organizes raw data but also improves the learning quality and efficiency of deep learning algorithms. Depending on the structure of the dataset and the problem domain, there are many data preprocessing techniques, including data cleaning, transformation, normalization, augmentation, handling of imbalanced datasets, and more. Data preprocessing ensures a meaningful representation of the data, while cross-validation and effective data-splitting strategies ensure robust model training and evaluation. Different datasets and problem types require different strategies, which makes preprocessing an iterative, flexible, and very important part of deep learning workflows.

Future advancements and emerging trends in data preprocessing for deep learning include automated preprocessing techniques, deep-learning-specific preprocessing techniques, handling of unstructured data, transfer learning for preprocessing, explainability and interpretability in data preprocessing, privacy-preserving preprocessing, dynamic and adaptive preprocessing, and integration of domain knowledge. These advancements aim to make data preprocessing more efficient and effective, leading to better model performance, interpretability, and adaptability. By embracing these trends, deep learning engineers can stay at the forefront of data preprocessing and optimize their models for better results.

We hope you enjoyed the article. Thanks for reading!