Anime Generation with Generative Models | Generative AI

Introduction

Generative models have transformed anime creation by making it possible to produce a wide variety of characters and scenes from simple text descriptions. This guide gives hobbyists and researchers a clear, practical introduction to anime generation with generative models, from the basic ideas to more advanced approaches.

Importance of Anime Generation

Generative models lower the barrier to anime content creation. Artists can produce high-quality anime-style artwork quickly, iterate on characters and scenes directly from text prompts, and personalize the results. This encourages experimentation and collaboration within the anime community and changes how such content is created and consumed.

Let’s dive into anime generation with generative models.

- Diffusion-based text-to-image generative model

Overview: Anime Generation Using Animagine XL 3.0 (a Diffusion-Based Text-to-Image Generative Model)

Animagine XL 3.0 is an open-source anime text-to-image model built on Stable Diffusion XL. Compared with earlier versions, it improves overall image quality, hand anatomy, the handling of tag order in prompts, and its understanding of anime concepts. It is the most advanced model in the Animagine series, and its training focused on learning concepts rather than aesthetics.

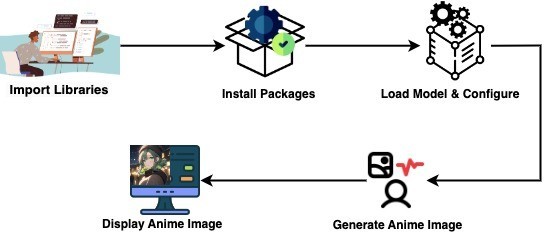

The Workflow:

Implementation of Anime Generation Using Animagine XL 3.0

Let’s walk through a simple example to understand the process better:

Step 1: Install Library

```python
!pip install -q --upgrade diffusers invisible_watermark transformers accelerate safetensors
```

Step 2: Import Libraries

```python
import torch
import matplotlib.pyplot as plt
from PIL import Image
from diffusers import StableDiffusionXLPipeline, EulerAncestralDiscreteScheduler
```

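Step 3: Choose the Model

```python
# Hugging Face Hub ID of the checkpoint to load.
# Note: this ID appears to point to the original Animagine XL release; Animagine XL 3.0
# is published as "cagliostrolab/animagine-xl-3.0". If you switch, check its model card:
# it may not provide an fp16 variant, in which case omit variant="fp16" in Step 4.
model = "linaqruf/animagine-xl"
```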

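Step 4: Initialize the Stable Diffusion XL Pipeline

This code loads a pre-trained Stable Diffusion XL pipeline for the chosen checkpoint. Passing torch_dtype=torch.float16 keeps the weights in half precision, roughly halving memory use compared with float32; use_safetensors=True loads the weights from .safetensors files, a format that, unlike pickle-based checkpoints, cannot execute arbitrary code when loaded; and variant="fp16" downloads the checkpoint's pre-converted half-precision weight files instead of the full-precision ones.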

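```python
pipe = StableDiffusionXLPipeline.from_pretrained(
    model,
    torch_dtype=torch.float16,  # keep weights in half precision
    use_safetensors=True,       # load weights from .safetensors files
    variant="fp16",             # download the fp16 weight files
)
```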
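Why does float16 matter? The memory needed just to hold the weights scales with the number of bytes per parameter. The plain-Python sketch below illustrates that arithmetic, assuming a hypothetical 2.6-billion-parameter model purely for illustration. Real GPU usage also includes activations and the other pipeline components, so treat these figures as lower bounds.

```python
import struct

# Bytes per element, taken from the struct module:
# 'f' is IEEE 754 float32 (4 bytes), 'e' is IEEE 754 float16 (2 bytes).
BYTES_PER_ELEMENT = {
    "float32": struct.calcsize("f"),
    "float16": struct.calcsize("e"),
}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Approximate memory needed only to store the weights, in GiB."""
    return num_params * BYTES_PER_ELEMENT[dtype] / 1024**3


# Hypothetical parameter count, for illustration only.
num_params = 2_600_000_000
for dtype in ("float32", "float16"):
    print(f"{dtype}: {weight_memory_gib(num_params, dtype):.1f} GiB")
```

Whatever the exact parameter count, switching from float32 to float16 halves the weight footprint, which is often what lets an SDXL-sized model fit on a consumer GPU.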

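Step 5: Configure the Scheduler and Move the Pipeline to the GPU

This code replaces the pipeline's default scheduler with EulerAncestralDiscreteScheduler, built from the existing scheduler configuration so that settings such as the noise schedule carry over. The scheduler controls how noise is removed at each denoising step and therefore affects the look of the result; Euler ancestral is a commonly used sampler for anime-style models. Moving the pipeline to a CUDA device runs inference on the GPU, which is far faster than running on the CPU; the float16 setup in Step 4 assumes a GPU.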

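```python
# Swap in the Euler ancestral scheduler, reusing the current scheduler's config.
pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

# Move all pipeline components to the GPU.
pipe.to('cuda')
```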
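To get a feel for what the scheduler does, here is a scalar sketch of one ancestral Euler step in plain Python. It is modeled on the update EulerAncestralDiscreteScheduler performs (an assumption; consult the diffusers source for the exact implementation): the next noise level is split into a deterministic part, reached with an Euler step, and a random part, added as fresh Gaussian noise. The function names, the toy noise schedule, and the constant "model" prediction are hypothetical.

```python
import math
import random
from typing import Tuple


def ancestral_sigmas(sigma_from: float, sigma_to: float) -> Tuple[float, float]:
    """Split the next noise level into a random and a deterministic part.

    Returns (sigma_up, sigma_down) with sigma_up**2 + sigma_down**2 == sigma_to**2.
    """
    sigma_up = math.sqrt(sigma_to**2 * (sigma_from**2 - sigma_to**2) / sigma_from**2)
    # Guard against tiny negative values caused by floating-point rounding.
    sigma_down = math.sqrt(max(sigma_to**2 - sigma_up**2, 0.0))
    return sigma_up, sigma_down


def euler_ancestral_step(
    x: float,
    denoised: float,
    sigma_from: float,
    sigma_to: float,
    rng: random.Random,
) -> float:
    """One ancestral Euler update for a single scalar 'pixel'.

    x        -- current noisy sample at noise level sigma_from
    denoised -- the model's estimate of the clean sample
    """
    sigma_up, sigma_down = ancestral_sigmas(sigma_from, sigma_to)
    # Direction from the clean estimate towards the noisy sample.
    d = (x - denoised) / sigma_from
    # Deterministic Euler step down to noise level sigma_down.
    x = x + d * (sigma_down - sigma_from)
    # Ancestral part: add fresh Gaussian noise with standard deviation sigma_up.
    return x + rng.gauss(0.0, 1.0) * sigma_up


# Toy usage: the hypothetical "model" always predicts a clean value of 0.5.
rng = random.Random(0)
sigmas = [10.0, 5.0, 2.0, 1.0, 0.5, 0.0]
x = rng.gauss(0.0, 1.0) * sigmas[0]
for s_from, s_to in zip(sigmas, sigmas[1:]):
    x = euler_ancestral_step(x, denoised=0.5, sigma_from=s_from, sigma_to=s_to, rng=rng)
print(x)
```

Because fresh noise is injected at every step, the final image varies from run to run unless the random seed is fixed.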

Step 6: Generate Anime Image

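This code generates an anime-style image of a girl with green hair, wearing a sweater and a beanie, outdoors at night. The prompt is a comma-separated list of tags describing the desired content and quality, while the negative prompt lists qualities to avoid, such as low resolution and bad anatomy. guidance_scale=12 controls how strongly the image follows the prompt, num_inference_steps=50 sets the number of denoising steps, and original_size and target_size are SDXL's size-conditioning inputs. The result is saved to /content/anime_girl.png (the default working directory in Google Colab) and then displayed with Matplotlib.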

```python
# Tag-style prompt describing the desired image.
prompt = "face focus, cute, masterpiece, best quality, 1girl, green hair, sweater, looking at viewer, upper body, beanie, outdoors, night, turtleneck"

# Qualities the model should steer away from.
negative_prompt = "lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry"

# /content is the default working directory in Google Colab; adjust elsewhere.
output = "/content/anime_girl.png"

image = pipe(
    prompt,
    negative_prompt=negative_prompt,
    width=1024,                  # output resolution
    height=1024,
    guidance_scale=12,           # strength of prompt adherence
    target_size=(1024, 1024),    # SDXL size conditioning
    original_size=(4096, 4096),
    num_inference_steps=50,      # number of denoising steps
).images[0]

image.save(output)

# Reload the saved file and display it.
image = Image.open(output)
plt.imshow(image)
plt.axis('off')  # hide the axes
plt.show()
```
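The guidance_scale and negative_prompt arguments work together through classifier-free guidance. At every denoising step the model predicts the noise twice, once conditioned on the prompt and once on the negative prompt, and the two predictions are combined. The sketch below shows that combination on plain Python lists with toy values; the function name is hypothetical, and diffusers applies essentially the same formula to whole tensors.

```python
from typing import List


def classifier_free_guidance(
    noise_uncond: List[float],
    noise_cond: List[float],
    guidance_scale: float,
) -> List[float]:
    """Combine the two noise predictions made at each denoising step.

    noise_uncond -- prediction conditioned on the negative (or empty) prompt
    noise_cond   -- prediction conditioned on the positive prompt
    """
    # Start from the unconditional prediction and move guidance_scale times
    # the distance towards (and past) the prompt-conditioned prediction.
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


# Toy noise predictions, for illustration only.
uncond = [0.10, -0.20, 0.05]
cond = [0.30, -0.10, 0.00]
print(classifier_free_guidance(uncond, cond, guidance_scale=12))
```

A scale of 1 returns the prompt-conditioned prediction unchanged, while larger values such as the 12 used above push the result further away from the negative prompt, which strengthens prompt adherence but can oversaturate the image if set too high.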

Generated Output Anime Image:

Conclusion

Generative models have made anime creation more accessible and more open to experimentation. With open models such as Animagine XL 3.0 and resource-saving settings like half-precision weights and GPU inference, creators can produce high-quality anime-style images quickly, which encourages collaboration and exploration within the anime community.