Music Generation with Generative Models | Generative AI

Introduction

Welcome to "Exploring Music Generation with Generative Models"! This lesson explores how generative models bring together artificial intelligence and music. Learn how these algorithms can produce engaging melodies and harmonies, opening new creative territory for artists and programmers. Come explore with us how inventive compositions can emerge from a few simple lines of code.

Importance of Music Generation with Generative Models

Music generation with generative models brings AI into musical composition. These models can generate diverse melodies, harmonies, and rhythms from simple inputs such as text prompts, supporting creativity, collaboration, and personalized musical experiences. This fusion of technology and art expands artistic horizons and drives innovation in AI research.

Let’s dive into music generation with the following generative model:

- MusicGen, a text-to-music model (checkpoint: facebook/musicgen-small)

Overview: Music Generation Using facebook/musicgen-small

MusicGen is a text-to-music model that generates high-quality music samples conditioned on text descriptions or audio prompts. It is a single-stage auto-regressive Transformer trained over a 32 kHz EnCodec tokenizer with four codebooks sampled at 50 Hz, and it produces all four codebooks in a single pass.
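Generating all four codebooks in one pass works because MusicGen introduces a small delay between the codebooks, which lets it predict them in parallel at each step. The sketch below illustrates that delay idea with plain Python lists: codebook k is shifted right by k steps and padded, so each column of the resulting grid is what a single decoding step emits. The function names, the `PAD` placeholder, and the token ids are hypothetical; this is a conceptual illustration, not the Transformers or Audiocraft implementation.

```python
from typing import List, Optional

# Hypothetical placeholder for grid positions that hold no real token yet.
PAD: Optional[int] = None


def apply_delay_pattern(codes: List[List[int]]) -> List[List[Optional[int]]]:
    """Shift codebook k right by k steps (conceptual sketch of a codebook delay).

    codes[k][t] is the token of codebook k for audio frame t. The result has
    K rows and T + K - 1 columns; column s is what one decoding step emits.
    """
    num_codebooks = len(codes)
    delayed = []
    for k, row in enumerate(codes):
        # k leading pads, the real tokens, then trailing pads so all rows match.
        delayed.append([PAD] * k + list(row) + [PAD] * (num_codebooks - 1 - k))
    return delayed


def undo_delay_pattern(delayed: List[List[Optional[int]]]) -> List[List[Optional[int]]]:
    """Realign the delayed grid so column t again holds every codebook of frame t."""
    num_codebooks = len(delayed)
    num_frames = len(delayed[0]) - num_codebooks + 1
    return [row[k:k + num_frames] for k, row in enumerate(delayed)]


if __name__ == "__main__":
    # Four codebooks (as in musicgen-small), three frames of made-up token ids.
    codes = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
    grid = apply_delay_pattern(codes)
    for step in range(len(grid[0])):
        print(step, [row[step] for row in grid])
    assert undo_delay_pattern(grid) == codes
```

In this toy grid, column 3 holds codebook 1 of frame 2, codebook 2 of frame 1, and codebook 3 of frame 0, so for every frame the lower codebooks are generated before the higher ones while the model still emits one column per step.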

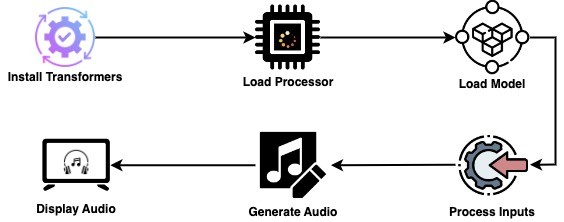

The Workflow:

Implementation of Music Generation Using facebook/musicgen-small

Transformers Usage

Let’s walk through a simple example to understand things better:

Step 1: Installing Dependencies

!pip install git+https://github.com/huggingface/transformers.git

Step 2: Import Libraries

import scipy.io.wavfile
from IPython.display import Audio
from transformers import AutoProcessor, MusicgenForConditionalGeneration

Step 3: Run the following Python code to generate text-conditional audio samples

This code loads the pre-trained facebook/musicgen-small processor and model, then generates music from textual descriptions of genres such as 80s pop and 90s rock. Both prompts are processed as one padded batch, so a single call to generate produces one audio sample per prompt.

processor = AutoProcessor.from_pretrained("facebook/musicgen-small")
model = MusicgenForConditionalGeneration.from_pretrained("facebook/musicgen-small")

# Tokenize both prompts as one padded batch of PyTorch tensors
inputs = processor(
    text=["80s pop track with bassy drums and synth", "90s rock song with loud guitars and heavy drums"],
    padding=True,
    return_tensors="pt",
)

# 256 new tokens is roughly 5 seconds of audio (tokens are generated at 50 Hz)
audio_values = model.generate(**inputs, max_new_tokens=256)
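The `max_new_tokens` argument sets the output length in generation steps, not seconds. Because MusicGen's EnCodec tokenizer runs at 50 frames per second, duration is roughly the token count divided by 50, so 256 tokens give about 5 seconds. The helpers below sketch that conversion; `tokens_for_duration` and `approx_duration` are hypothetical names, not Transformers APIs. In Transformers the frame rate should also be available as `model.config.audio_encoder.frame_rate`, and the real output length may differ by a few frames.

```python
import math

# Assumption: MusicGen's 32 kHz EnCodec tokenizer produces 50 frames (tokens) per second.
FRAME_RATE_HZ = 50


def tokens_for_duration(seconds: float, frame_rate: int = FRAME_RATE_HZ) -> int:
    """Approximate max_new_tokens needed for `seconds` of audio (rounded up)."""
    return math.ceil(seconds * frame_rate)


def approx_duration(num_tokens: int, frame_rate: int = FRAME_RATE_HZ) -> float:
    """Approximate audio length in seconds produced by `num_tokens` generation steps."""
    return num_tokens / frame_rate


if __name__ == "__main__":
    print(approx_duration(256))    # 5.12
    print(tokens_for_duration(8))  # 400
```

With the model loaded, a call such as `model.generate(**inputs, max_new_tokens=tokens_for_duration(8))` would request roughly 8 seconds of audio.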

Step 4: Listen to the audio samples in an .ipynb notebook:

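# Sampling rate of the decoded audio (32 kHz for MusicGen)
sampling_rate = model.config.audio_encoder.sampling_rate
# Play the first generated sample inline
Audio(audio_values[0].numpy(), rate=sampling_rate)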

Step 5: Alternatively, save them as a .wav file using a third-party library such as scipy:

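sampling_rate = model.config.audio_encoder.sampling_rate
# audio_values has shape (batch_size, num_channels, num_samples); [0, 0] is the first prompt's mono track
scipy.io.wavfile.write("musicgen_out.wav", rate=sampling_rate, data=audio_values[0, 0].numpy())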
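As a dependency-free alternative to SciPy, Python's standard-library `wave` module can write a 16-bit PCM .wav file. The sketch below assumes mono float samples roughly in [-1, 1], clips anything outside that range, and scales the rest to 16-bit integers. `write_wav_16bit` is a hypothetical helper; with the model you would pass something like `audio_values[0, 0].tolist()` together with `sampling_rate`. The demo writes a synthetic 440 Hz tone instead of model output so it runs on its own.

```python
import math
import struct
import wave
from typing import Sequence


def write_wav_16bit(path: str, samples: Sequence[float], rate: int) -> None:
    """Write mono float samples (expected in [-1, 1]) as a 16-bit PCM .wav file."""
    # Clip to [-1, 1] so out-of-range values do not wrap around, then scale to int16.
    ints = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    frames = struct.pack("<%dh" % len(ints), *ints)  # little-endian signed 16-bit
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)   # mono, like musicgen-small's output
        wf.setsampwidth(2)   # 2 bytes per sample = 16-bit
        wf.setframerate(rate)
        wf.writeframes(frames)


if __name__ == "__main__":
    rate = 32000
    # Half a second of a 440 Hz sine at half amplitude, standing in for model output.
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate // 2)]
    write_wav_16bit("tone.wav", tone, rate)
```

Storing 16-bit integers rather than floats keeps the file readable by virtually any audio player, at the cost of quantizing the samples.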

Audiocraft Usage

Step 1: Installing Dependencies

!pip install git+https://github.com/facebookresearch/audiocraft.git

Make sure to have ffmpeg installed:

!apt-get install -y ffmpeg

Step 2: Import Libraries

from audiocraft.models import MusicGen

from audiocraft.data.audio import audio_write

Step 3: Load model & generate

This code loads the pre-trained small MusicGen model, generates an 8-second audio sample for each style description, and saves each sample as a .wav file with loudness normalization applied.

model = MusicGen.get_pretrained("facebook/musicgen-small")
model.set_generation_params(duration=8)  # generate 8 seconds.
descriptions = ["happy rock", "energetic EDM"]
wav = model.generate(descriptions)  # generates 2 samples.

for idx, one_wav in enumerate(wav):
    # Will save under {idx}.wav, with loudness normalization at -14 dB LUFS.
    audio_write(f'{idx}', one_wav.cpu(), model.sample_rate, strategy="loudness")
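The `strategy="loudness"` option normalizes each file to a target loudness of -14 LUFS. True LUFS measurement (ITU-R BS.1770) applies K-weighting filters and gating, so the sketch below substitutes a much simpler RMS level in dBFS as a proxy, purely to show the gain arithmetic: measure the current level, compute the gain in dB needed to reach the target, and convert it to a linear factor with 10^(gain/20). `rms_dbfs` and `normalize_rms` are hypothetical helpers and are not Audiocraft's implementation.

```python
import math
from typing import List, Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (amplitude 1.0); -inf for silence."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")
    return 10 * math.log10(mean_square)  # same as 20 * log10(rms)


def normalize_rms(samples: Sequence[float], target_db: float = -14.0) -> List[float]:
    """Scale samples so their RMS level is target_db (a simplified stand-in for LUFS)."""
    current_db = rms_dbfs(samples)
    if math.isinf(current_db):
        return list(samples)  # silence: no gain can reach the target
    gain_db = target_db - current_db
    gain = 10 ** (gain_db / 20)  # dB change -> linear amplitude factor
    return [s * gain for s in samples]


if __name__ == "__main__":
    rate = 32000
    quiet_tone = [0.1 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
    louder = normalize_rms(quiet_tone)
    print(round(rms_dbfs(quiet_tone), 2), round(rms_dbfs(louder), 2))
```

Normalizing to a common loudness target means samples generated from different prompts play back at comparable perceived volume, which is why a fixed target is applied to every file in the loop.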

Conclusion

Generative models such as MusicGen, together with libraries like Transformers and Audiocraft, let musicians experiment easily with a wide range of musical styles and genres. By generating melodies and harmonies from short text prompts, these models can enrich individual musical experiences and support collaboration. Adopting AI-driven composition tools opens new creative avenues and may influence music in previously unheard-of ways.