Text Generation with Generative Models | Generative AI

Introduction

Generative models have changed how text is written and processed in natural language processing (NLP). They now underpin many applications, from chatbots to machine translation systems. This section of the course covers text generation with generative models: why they matter, how they work, and how to use them in practice.

Importance of Language Models

Text generation relies on language models. These deep learning models can interpret, produce, and transform human language, and they are central to tasks such as sentiment analysis, summarization, and text generation. Large-scale pre-trained models like GPT have made it practical to handle increasingly complex text generation tasks.

Let’s dive into text generation with two generative models:

- GPT-2

- GPT-4

Overview of Text Generation with GPT-2 and GPT-4

GPT-2: Developed by OpenAI, GPT-2 uses deep learning to produce human-like text. Its core strength is text completion, and it can also attempt tasks such as translation and summarization without task-specific training, which made it influential in content generation and conversational AI.

GPT-4: OpenAI's GPT-4 is a large language model (LLM) that can handle complex tasks such as email writing and code generation, adapt to specific tones, emotions, and genres, and work with text in 26 languages.

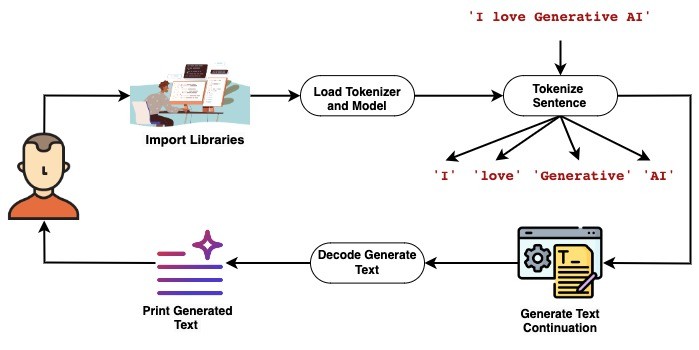

The Workflow:

Implementation of Text Generation Using GPT-2

Let’s go through a simple code example to understand things better:

Step 1: Installation of the Transformers Library

!pip install transformers torch

Step 2: Import Libraries

from transformers import GPT2Tokenizer, GPT2LMHeadModel

Step 3: Load the Tokenizer and Model

The code initializes the GPT-2 tokenizer and model ('gpt2-large'). GPT-2 has no dedicated padding token, so the end-of-sequence token id is passed as `pad_token_id`. The last two lines display that id and its decoded text form.

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-large')

model = GPT2LMHeadModel.from_pretrained('gpt2-large', pad_token_id=tokenizer.eos_token_id)

tokenizer.eos_token_id

tokenizer.decode(tokenizer.eos_token_id)
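To make the role of `pad_token_id` more concrete, here is a minimal sketch of filling shorter sequences in a batch with the end-of-sequence id. The helper `pad_batch` and the token ids in `batch` are made up for illustration; only 50256, GPT-2's `<|endoftext|>` id, comes from the real tokenizer. It is not meant to reproduce how the library pads internally.

```python
EOS_TOKEN_ID = 50256  # GPT-2's <|endoftext|> id, reused here as the padding id


def pad_batch(sequences, pad_id=EOS_TOKEN_ID):
    """Right-pad each list of ids to the longest length.

    Returns the padded ids and an attention mask (1 = real token, 0 = padding).
    """
    max_len = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        padded.append(seq + [pad_id] * n_pad)
        mask.append([1] * len(seq) + [0] * n_pad)
    return padded, mask


batch = [[11, 22, 33, 44], [55, 66]]  # made-up token ids
padded, mask = pad_batch(batch)
print(padded)
print(mask)
```

The attention mask is what lets a model ignore the padded positions, which is why reusing the end-of-sequence id as padding does not change the meaning of the real tokens.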

Step 4: Text Tokenization and Decoding

The code converts the sentence "I love Generative AI" into numerical tokens and decodes a specific token, enabling conversion between text and numerical formats, vital for natural language processing tasks.

sentence = 'I love Generative AI'

numeric_ids = tokenizer.encode(sentence, return_tensors='pt')

numeric_ids

tokenizer.decode(numeric_ids[0][3])
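The mapping between text and ids can be illustrated without downloading a model. The sketch below swaps in a word-level vocabulary built from a single sentence; GPT-2 itself uses a byte-level subword vocabulary, so the ids here will not match the real tokenizer's output. `ToyTokenizer` is a hypothetical class written only for this illustration.

```python
class ToyTokenizer:
    """Word-level stand-in for a real subword tokenizer (illustration only)."""

    def __init__(self, corpus):
        # Assign one id per distinct word, in sorted order.
        words = sorted(set(corpus.split()))
        self.token_to_id = {word: i for i, word in enumerate(words)}
        self.id_to_token = {i: word for word, i in self.token_to_id.items()}

    def encode(self, text):
        """Turn a whitespace-separated string into a list of ids."""
        return [self.token_to_id[word] for word in text.split()]

    def decode(self, ids):
        """Turn a list of ids back into a string."""
        return " ".join(self.id_to_token[i] for i in ids)


sentence = "I love Generative AI"
toy = ToyTokenizer(sentence)
ids = toy.encode(sentence)
print(ids)                    # ids for the whole sentence
print(toy.decode([ids[3]]))   # decode a single position, as in the step above
print(toy.decode(ids))        # round trip back to the original text
```

Encoding followed by decoding returns the original sentence, which is the property the tokenizer step relies on.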

Step 5: Generate the text given the sentence

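The code uses GPT-2 to generate a continuation of the input. `max_length=100` caps the total length (prompt plus continuation) at 100 tokens, `num_beams=5` enables beam search with five beams, `no_repeat_ngram_size=2` prevents any two-token sequence from appearing twice, and `early_stopping=True` ends the search once enough finished candidates have been found. The result is then decoded and printed.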

result = model.generate(numeric_ids, max_length=100, num_beams=5, no_repeat_ngram_size=2, early_stopping=True)

result

generated_text = tokenizer.decode(result[0], skip_special_tokens=True)

print(generated_text)
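To show what beam search and n-gram blocking do, here is a simplified sketch over a tiny hand-written probability table. Everything in it is an assumption made for illustration: the table `NEXT_TOKEN_PROBS`, the `<s>` and `</s>` markers, and the helpers `repeats_ngram` and `beam_search`. The library's own implementation differs in its bookkeeping (for example, it also applies a length penalty), so treat this as a picture of the idea rather than of `model.generate`.

```python
import math

# Hypothetical next-token probabilities, keyed by the previous token.
NEXT_TOKEN_PROBS = {
    "<s>": {"I": 0.8, "AI": 0.2},
    "I": {"love": 0.9, "</s>": 0.1},
    "love": {"AI": 0.7, "I": 0.3},
    "AI": {"</s>": 0.8, "I": 0.2},
}


def repeats_ngram(tokens, n):
    """True if the last n tokens already occurred earlier in the sequence."""
    if n <= 0 or len(tokens) < n:
        return False
    last = tuple(tokens[-n:])
    earlier = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n)]
    return last in earlier


def beam_search(num_beams=2, max_length=6, no_repeat_ngram_size=2):
    """Keep the num_beams most probable partial sequences at each step."""
    beams = [(0.0, ["<s>"])]  # (log-probability, tokens)
    finished = []
    for _ in range(max_length):
        candidates = []
        for score, tokens in beams:
            for token, prob in NEXT_TOKEN_PROBS[tokens[-1]].items():
                extended = tokens + [token]
                if repeats_ngram(extended, no_repeat_ngram_size):
                    continue  # drop continuations that repeat an n-gram
                candidates.append((score + math.log(prob), extended))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for candidate in candidates[:num_beams]:
            if candidate[1][-1] == "</s>":
                finished.append(candidate)
            else:
                beams.append(candidate)
        if not beams:
            break
    best_score, best_tokens = max(finished + beams, key=lambda c: c[0])
    return " ".join(t for t in best_tokens if t not in ("<s>", "</s>"))


print(beam_search(num_beams=2, no_repeat_ngram_size=2))
```

Keeping several candidates alive lets the search recover from a locally attractive but poor first choice, and the n-gram check is what stops loops such as "I love I love" from surviving.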

Generated Output Text:

"I love Generative AI, but I don't think it's the right way to go about it. I think we need to think about how we're going to use AI in the real world, not just in games."

"I think there are a lot of things that we can do to make the world a better place," he continued. "I'm a big fan of the idea of using AI to improve the quality of life for people."

Implementation of Text Generation Using GPT-4

Let’s go through a simple code example to understand things better:

Step 1: Importing Necessary Libraries

OpenAI's API, backed by models such as GPT-3 and GPT-4, lets developers generate human-like text with a few lines of code. It is used in chatbots, content creation, translation, and more. The example needs the standard `os` module and the `openai` package, which can be installed with `pip install openai`.

import os

import openai

Step 2: Setting Up OpenAI API Key

# Read the API key from the OPENAI_API_KEY environment variable

openai.api_key = os.environ.get("OPENAI_API_KEY")
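Since `os.environ.get` silently returns `None` when the variable is missing, a small guard can surface the problem before any request is made. The helper below is a sketch; `load_api_key` is a hypothetical name, not part of the `openai` package.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Return the key stored in env_var, or raise a readable error if unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running this code."
        )
    return key
```

The returned value can then be passed wherever the key is needed, so the key never has to appear in the source file.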

Step 3: Initializing OpenAI Client

from openai import OpenAI

# Read the key from the environment instead of hardcoding it in the source
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

Step 4: Defining Function to Generate API Validation Tests

The function `generate_api_validation_tests` utilizes OpenAI's GPT-4 model to create validation tests based on an API description. It accepts parameters for the description, maximum tokens, and temperature to control text length and randomness. The function then returns the generated validation tests.

def generate_api_validation_tests(api_description, max_tokens=20, temperature=0.7):
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "Text Generate"},
            {"role": "user", "content": api_description}
        ],
        max_tokens=max_tokens,
        temperature=temperature
    )
    return response.choices[0].message.content
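The effect of `temperature` on randomness can be sketched with the standard temperature-scaled softmax. How the API applies the parameter internally is not described here, so this is an illustration of the usual formulation, with made-up logits and a hypothetical helper name.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.5, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, giving more predictable text; higher temperatures spread it out, giving more varied text.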

Step 5: Generating and Printing the Text

The code calls `generate_api_validation_tests` with the prompt "i love Generative AI", a maximum of 20 tokens, and a temperature of 0.5, then prints the generated text. Because the prompt is a plain sentence rather than an API description, GPT-4 simply responds to it, and the 20-token limit cuts the reply off mid-sentence.

api_description = "i love Generative AI"

api_validation_tests = generate_api_validation_tests(api_description, max_tokens=20, temperature=0.5)

print(api_validation_tests)

Generated Output Text

Generative AI is indeed a fascinating field. It's a type of artificial intelligence that's capable of
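The reply above stops mid-sentence, which is consistent with hitting the `max_tokens=20` limit. One way to detect this, sketched below, is to check the `finish_reason` field of the returned choice, which the Chat Completions API sets to `"length"` when the token limit ended the reply. The `fake_response` object is a stand-in built for illustration, not output from a real API call, and `was_truncated` is a hypothetical helper.

```python
from types import SimpleNamespace


def was_truncated(response):
    """True if the first choice stopped because it reached the token limit."""
    return response.choices[0].finish_reason == "length"


# Stand-in shaped like a chat completion response (no API call is made).
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(content="Generative AI is indeed a fascinating field."),
        )
    ]
)

if was_truncated(fake_response):
    print("Reply was cut off; consider raising max_tokens.")
```

With a real response, the same check could be used to decide whether to retry with a larger `max_tokens` value.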

Conclusion

In short, generative models like GPT-2 and GPT-4 have reshaped text generation in NLP through their ability to understand and produce human-like text. GPT-2 can be run locally through the Transformers library, while GPT-4 is reached through OpenAI's API, and both approaches are used across many industries.